Simple descriptions of the Scientific Method typically run like this:

- Collect data

- Look for patterns

- Form hypotheses

- Gather more data

- Weed out the hypotheses that don’t fit the data

- Whatever survives is the truth

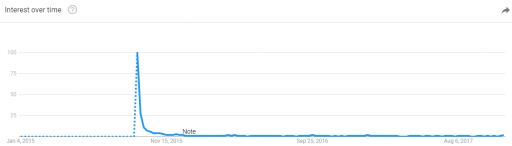

There’s obviously more to it than that, but every popular description I’ve seen leaves out one crucial aspect: frequently, when the hypothesis doesn’t fit the data, it’s the data that’s wrong. This is not an invitation to cherry-pick your data; it’s recognition of a basic problem, particularly in social and business systems.

Any time you are building an integrated systems model, it’s likely that you will have to rely on data from a variety of sources, with differences in granularity, time horizons, and interpretation. Those data streams have probably never been combined before, and therefore they haven’t been properly vetted. They’re almost certain to have problems. If you’re only looking for problems with your hypothesis, you’re at risk of throwing the good model baby out with the bad data bathwater.

The underlying insight is that data is not really distinct from models; it comes from processes that are full of implicit models. Even “simple” measurements like temperature are really complex and assumption-laden, but at least we can easily calibrate thermometers and agree on the definition of the kelvin and its scale. This is not always the case for organizational data.

A winning approach, therefore, is to pursue every lead:

- Is the model wrong?

  - Does it pass or fail extreme conditions tests, conservation laws, and other reality checks?

  - How exactly, and how systematically, does it fail to follow the data?

  - What feedbacks might explain the shortcomings?

- Is the data wrong?

  - Do sources agree?

  - Does it mean what people think it means?

  - Are temporal patterns dynamically plausible?

- If the model doesn’t fit the data, which is to blame?
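A few of the data-side questions above can be roughed out in code. What follows is only a minimal sketch in plain Python, not a prescribed method: the function names, the tolerances, and the headcount figures (two hypothetical sources, `hr` and `finance`, reporting the same quantity) are all invented for illustration. It checks whether two sources agree, whether period-to-period changes are dynamically plausible, and, borrowing the conservation idea from the model-side checks, whether a reported stock is consistent with its reported flows.

```python
def source_disagreement(a, b, rel_tol=0.05):
    """Indices where two sources differ by more than rel_tol (relative difference)."""
    flagged = []
    for i, (x, y) in enumerate(zip(a, b)):
        scale = max(abs(x), abs(y))
        if scale > 0 and abs(x - y) / scale > rel_tol:
            flagged.append(i)
    return flagged


def implausible_jumps(series, max_rel_change=0.25):
    """Indices where the value moves more than max_rel_change from the prior point."""
    flagged = []
    for i in range(1, len(series)):
        prev = series[i - 1]
        if prev != 0 and abs(series[i] - prev) / abs(prev) > max_rel_change:
            flagged.append(i)
    return flagged


def stock_flow_residuals(stock, inflow, outflow):
    """Conservation check: stock[t+1] - stock[t] should equal inflow[t] - outflow[t]."""
    return [
        (stock[t + 1] - stock[t]) - (inflow[t] - outflow[t])
        for t in range(len(stock) - 1)
    ]


# Hypothetical monthly headcount from two departments (made-up numbers).
hr = [100, 104, 109, 115, 60, 121]
finance = [100, 105, 108, 114, 118, 120]
hires = [7, 5, 8, 6, 4]   # inflow during each month
exits = [2, 2, 2, 2, 2]   # outflow during each month

print(source_disagreement(hr, finance))            # months where the sources part ways
print(implausible_jumps(hr))                       # suspicious swings in the HR series
print(stock_flow_residuals(finance, hires, exits)) # nonzero means stock and flows disagree
print(stock_flow_residuals(hr, hires, exits))
```

None of these checks says which series is right. They only point to where to start asking whether the number means what people think it means.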

When you’re building a systems model, it’s likely that you’re a pioneer in uncharted territory, and therefore you’ll learn something new and valuable either way.