From some interesting discussions with Angie Moon, a first cut at a taxonomy of parameters in SD models, with their fates:

Can AI do SD?

There are lots of interesting developments in machine learning for time series modeling, but can an AI write about systems sensibly? I decided to try a few free online AI content generators.

Using system dynamics modeling for project delay and disruption

1. System Dynamics Modeling (SDM)

System dynamics modeling is a mathematical model that helps us understand how systems work and how they interact with each other. SDM is useful for understanding complex systems and their behavior over time. In addition, it can help predict future outcomes based on current conditions.

2. Project Delay & Disruption

Project delays and disruptions occur frequently in projects. These events cause significant problems for organizations and individuals alike. A project delay occurs when a project is delayed past its original completion date. A project disruption occurs when a project is disrupted before it reaches its original completion date. Both types of project delays and disruptions can have negative effects on the success of a project.

3. Causes of Project Delays & Disruptions

There are many causes of project delays and disruptions. Some of these causes include:

• Poor planning

• Unclear requirements

• Lack of communication

• Poor management

• Miscommunication

• Poor leadership

• Poor team coordination

• Poor resource allocation

Well, we didn’t get the rework cycle or any feedback effects, but not an entirely useless effort.

Do climate tipping points signal the onset of Limits to Growth?

Climate change is a serious human problem that causes concern among many people. Some believe that climate change is caused by human activity and that it will bring about a catastrophic future. However, others believe that it is natural and that there are ways to prevent it from causing further damage. Regardless of whether climate change is caused by human activity or not, it has the potential to cause major damage to the planet. Many believe that we are approaching climate tipping points, which could have catastrophic consequences for humanity.

One reason why climate tipping points signal the onset of limits to growth is because they are a harbinger of future environmental disasters. These disasters would occur because of the way we have altered the climate and what we have done to the planet. For example, the Larsen B ice shelf in Antarctica broke away in 2002 and disintegrated within four years due to global warming. Climate tipping points signal the onset of limits to growth because they are a harbinger of future environmental disasters. Governments and environmental groups should prepare for future environmental disasters by investing in renewable resources and making changes in agriculture to reduce food insecurity.

Another reason why climate tipping points signal the onset of limits to growth is because they are a harbinger of future economic problems. Scientists have warned us about manmade climate change for years; however, most people ignore their warnings and keep on using fossil fuels anyway. This is causing major problems for our planet, including droughts, rising sea levels and more frequent natural disasters. Unfortunately, many people are paying little attention to the warnings; they think they can ignore them and avoid problems later. Governments and environmental groups should prepare for future economic problems by investing in renewable resources and making changes in agriculture to reduce food insecurity.

I have to say, the AI is making more sense than the South Dakota legislature.

This is a case of a deer that had been found dead by an early hunter, then found by another hunter on the same day. These hunters were in the woods for several days, hunting deer. Then, for a week or more, the deer had eaten enough grass to cover itself, leaving the deer lying in its own feces. In the United States, this is called wasting. Chronic wasting is an infectious disease that, in humans, is caused by bacteria. When a person is infected with chronic wasting, they begin losing weight and become weak. They begin to smell bad, have sores on their body, lose their appetite, feel tired, listless and weak, having trouble standing up. Some people lose consciousness. Their physical…

I guess we still need modelers for some things.

Believing Exponential Growth

Verghese: You were prescient about the shape of the BA.5 variant and how that might look a couple of months before we saw it. What does your crystal ball show of what we can expect in the United Kingdom and the United States in terms of variants that have not yet emerged?

Pagel: The other thing that strikes me is that people still haven’t understood exponential growth 2.5 years in. With the BA.5 or BA.3 before it, or the first Omicron before that, people say, oh, how did you know? Well, it was doubling every week, and I projected forward. Then in 8 weeks, it’s dominant.

It’s not that hard. It’s just that people don’t believe it. Somehow people think, oh, well, it can’t happen. But what exactly is going to stop it? You have to have a mechanism to stop exponential growth at the moment when enough people have immunity. The moment doesn’t last very long, and then you get these repeated waves.

You have to have a mechanism that will stop it evolving, and I don’t see that. We’re not doing anything different to what we were doing a year ago or 6 months ago. So yes, it’s still evolving. There are still new variants shooting up all the time.

At the moment, none of these look devastating; we probably have at least 6 weeks’ breathing space. But another variant will come because I can’t see that we’re doing anything to stop it.
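Pagel's arithmetic is easy to check. The following is a minimal sketch, not her actual projection method: it assumes a new variant starts at a 1% share of cases (a hypothetical starting point, not a figure from the interview) and that its odds relative to the other variants double every week.

```python
def variant_share(initial_share, weeks, doubling_time_weeks=1.0):
    """Share of cases after `weeks`, if the variant's odds double every doubling time."""
    # Work in odds (share / (1 - share)) so the result stays between 0 and 1.
    odds = initial_share / (1.0 - initial_share)
    odds *= 2.0 ** (weeks / doubling_time_weeks)
    return odds / (1.0 + odds)


# Assumed 1% starting share; print the projected share every two weeks.
for week in range(0, 9, 2):
    print(week, round(variant_share(0.01, week), 3))
```

Eight doublings multiply the odds by 256, so under these assumptions a 1% foothold becomes a clear majority of cases within 8 weeks.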

Data & Uncertainty in SD – Health Policy SIG Presentation

Reading Between the Lines on Forrester’s Perspective on Data

I like Jay Forrester’s “Next 50 Years” reflection, except for his perspective on data:

I believe that fitting curves to past system data can be misleading.

OK, I’ll grant that fitting “curves” – as in simple regressions – may be a waste of time, but that’s a bit of a straw dog. The interesting questions are about fitting good dynamic models that pass all the usual structural tests as well as fitting data.

Also, the mere act of fitting a simple model doesn’t mislead; the mistake is believing the model. Simple fits can be extremely useful for exploratory analysis, even if you later discard the theories they imply.

Having a model give results that fit past data curves may impress a client.

True, though perhaps this is not the client you’d hope to have.

However, given a model with enough parameters to manipulate, one can cause any model to trace a set of past data curves.

This is Von Neumann’s elephant. He’s right, but I roll my eyes every time I hear this repeated – it’s a true but useless statement, like “all models are wrong.” Nonlinear dynamic models that pass SD quality checks usually don’t have anywhere near the degrees of freedom needed to reproduce arbitrary behaviors.
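To see what the elephant looks like in practice, here is a minimal sketch using a structure-free polynomial with one free parameter per data point. It is deliberately not an SD model, and the `history` values are made up for illustration.

```python
def lagrange_fit(xs, ys):
    """Return the polynomial through all (x, y) points: one parameter per data point."""
    def poly(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return poly


history = [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0]  # hypothetical data
times = list(range(len(history)))
model = lagrange_fit(times, history)

# The fit to history is perfect...
max_error = max(abs(model(t) - y) for t, y in zip(times, history))
# ...but one step beyond the data, the "model" leaves the historical range entirely.
forecast = model(len(history))
print(max_error, forecast)
```

With eight parameters for eight points, the fit is exact and the extrapolation is meaningless. A model whose parameters are tied to real structure has far fewer free knobs than this.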

Doing so does not give greater assurance that the model contains the structure that is causing behavior in the real system.

On the other hand, if the model can’t fit the data, why would you think it does contain the structure that is causing the behavior in the real system?

Furthermore, the particular curves of past history are only a special case. The historical curves show how the system responded to one particular combination of random events impinging on the system. If the real system could be rerun, but with a different random environment, the data curves would be different even though the system under study and its essential dynamic character are the same.

This is certainly true. However, the problem is that the particular curve of history is the only one we have access to. Every other description of behavior we might use to test the model is intuitively stylized – and we all know how reliable intuition in complex systems can be, right?

Exactly matching a historical time series is a weak indicator of model usefulness.

Definitely.

One must be alert to the possibility that adjusting model parameters to force a fit to history may push those parameters outside of plausible values as judged by other available information.

This problem is easily managed by assigning strong priors to known parameters in the model calibration process.
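A minimal sketch of the idea, assuming a toy first-order goal-seeking stock, a Gaussian prior on its time constant, and a brute-force grid search in place of a real optimizer. All names and numbers here are hypothetical, and this is not any particular package's calibration routine.

```python
def simulate(tau, steps, dt=1.0, goal=100.0, stock0=0.0):
    """Euler integration of a first-order goal-seeking stock with time constant tau."""
    stock = stock0
    path = []
    for _ in range(steps):
        path.append(stock)
        stock += dt * (goal - stock) / tau
    return path


def neg_log_posterior(tau, data, sigma_data, prior_mean, prior_sd):
    """Gaussian data misfit plus a Gaussian prior penalty on tau."""
    model = simulate(tau, len(data))
    misfit = sum(((m - d) / sigma_data) ** 2 for m, d in zip(model, data))
    prior = ((tau - prior_mean) / prior_sd) ** 2
    return 0.5 * (misfit + prior)


def calibrate(data, sigma_data, prior_mean, prior_sd):
    """Grid search over tau from 1.0 to 20.9 in steps of 0.1."""
    grid = [1.0 + 0.1 * i for i in range(200)]
    return min(grid, key=lambda tau: neg_log_posterior(
        tau, data, sigma_data, prior_mean, prior_sd))


# Synthetic "history" that by itself would suggest tau = 8.
data = simulate(8.0, 10)

# Other information says tau is about 5. Compare a vague and a strong prior.
weak = calibrate(data, sigma_data=10.0, prior_mean=5.0, prior_sd=100.0)
strong = calibrate(data, sigma_data=10.0, prior_mean=5.0, prior_sd=0.1)
print(weak, strong)
```

With the vague prior the estimate chases the data. With the strong prior it stays near the independently known value, and the remaining misfit becomes something to explain rather than something to tune away.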

Historical data is valuable in showing the characteristic behavior of the real system and a modeler should aspire to have a model that shows the same kind of behavior. For example, business cycle studies reveal a large amount of information about the average lead and lag relationships among variables. A business-cycle model should show similar average relative timing. We should not want the model to exactly recreate a sample of history but rather that it exhibit the kinds of behavior being experienced in the real system.

As above, how do we know what kinds of behavior are being experienced, if we only have access to one particular history? I think this comment implies the existence of intuitive data from other exemplars of the same system. If that’s true, perhaps we should codify those as reference modes and treat them like data.

Again, yielding to what the client wants may be the easy road, but it will undermine the powerful contributions that system dynamics can make.

This is true in so many ways. The client often wants too much detail, or too many scenarios, or too many exogenous influences. Any of these can obstruct learning, or break the budget.

These pages are full of data-free conceptual models that I think are valuable. But I also love data, so I have a different bottom line:

- Data and calibration by themselves can’t make the model worse – you’re adding information to the testing process, which is good.

- However, time devoted to data and calibration has an opportunity cost, which can be very high. So, you have to weigh time spent on the data against time spent on communication, theory development, robustness testing, scenario exploration, sensitivity analysis, etc.

- That time spent on data is not all wasted, because it’s a good excuse to talk to people about the system, may reveal features that no one suspected, and can contribute to storytelling about the solution later.

- Data is also a useful complement to talking to people about the system. Managers say they’re doing X. Are they really doing Y? Such cases may be revealed by structural problems, but calibration gives you a sharper lens for detecting them.

- If the model doesn’t fit the data, it might be the data that is wrong or misinterpreted, and this may be an important insight about a measurement system that’s driving the system in the wrong direction.

- If you can’t reproduce history, you have some explaining to do. You may be able to convince yourself that the model behavior replicates the essence of the problem, superimposed on some useless noise that you’d rather not reproduce. Can you convince others of this?

There are no decision makers…

A little gem from Jay Forrester:

One hears repeatedly the question of how we in system dynamics might reach “decision makers.” With respect to the important questions, there are no decision makers. Those at the top of a hierarchy only appear to have influence. They can act on small questions and small deviations from current practice, but they are subservient to the constituencies that support them. This is true in both government and in corporations. The big issues cannot be dealt with in the realm of small decisions. If you want to nudge a small change in government, you can apply systems thinking logic, or draw a few causal loop diagrams, or hire a lobbyist, or bribe the right people. However, solutions to the most important sources of social discontent require reversing cherished policies that are causing the trouble. There are no decision makers with the power and courage to reverse ingrained policies that would be directly contrary to public expectations. Before one can hope to influence government, one must build the public constituency to support policy reversals.

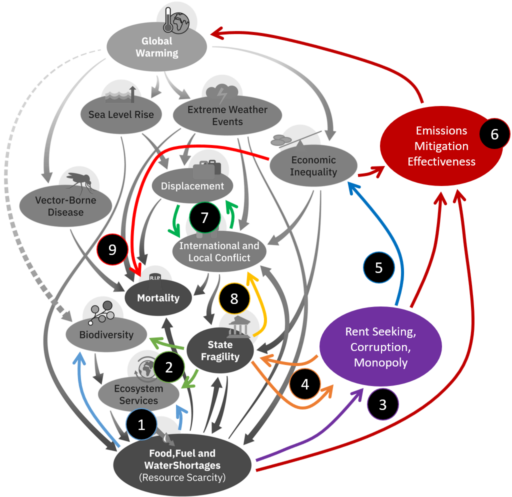

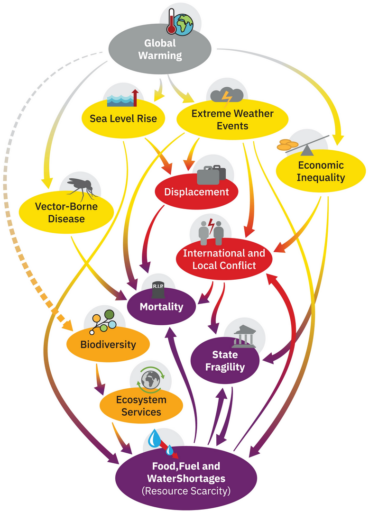

Climate Catastrophe Loops

PNAS has a new article on climate catastrophe mechanisms, focused on the social side, not natural tipping points. The article includes a causal loop diagram capturing some of the key feedbacks:

The diagram makes an unconventional choice: link polarity is denoted by dashed lines, rather than the usual + and – designations at arrowheads. Per the caption,

This is a causal loop diagram, in which a complete line represents a positive polarity (e.g., amplifying feedback; not necessarily positive in a normative sense) and a dotted line denotes a negative polarity (meaning a dampening feedback).

Does this new convention work? I don’t think so. It’s not less visually cluttered, and it makes negative links look tentative, though in fact there’s no reason for a negative link to have any less influence than a positive one. I think it makes it harder to assess loop polarity by following reversals from – links. There’s at least one goof: increasing ecosystem services should decrease food and water shortages, so that link should have negative polarity.

The caption also confuses link and loop polarity: “a complete line represents a positive polarity (e.g., amplifying feedback”. A single line is a causal link, not a loop, and therefore doesn’t represent feedback at all. (The rare exception might be a variable with a link back to itself, sometimes used to indicate self-reinforcement without elaborating on the mechanism.)
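The distinction can be made concrete: a link has a sign, and a loop's polarity is the product of the signs of its links. The sketch below is a minimal illustration. The example loop and its link signs are an assumed reading of the diagram, not taken from the PNAS article.

```python
def loop_polarity(link_polarities):
    """Polarity of a closed loop: the product of the signs of its links."""
    sign = 1
    for p in link_polarities:
        if p not in (+1, -1):
            raise ValueError("each link polarity must be +1 or -1")
        sign *= p
    return "reinforcing" if sign > 0 else "balancing"


# Assumed loop: shortages -> (+) resource consumption
#               resource consumption -> (-) ecosystem services
#               ecosystem services -> (-) shortages
print(loop_polarity([+1, -1, -1]))
```

An even number of negative links makes a loop reinforcing and an odd number makes it balancing. This is why a mislabeled link polarity can flip the apparent behavior of every loop passing through it.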

Nevertheless, I think this is a useful start toward a map of the territory. For me, it was generative, i.e. it immediately suggested a lot of related effects. I’ve elaborated on the original here:

- Food, fuel and water shortages increase pressure to consume more natural resources (biofuels, ag land, fishing for example) and therefore degrade biodiversity and ecosystem services. (These are negative links, but I’m not following the dash convention – I’m leaving polarity unlabeled for simplicity.) This is perverse, because it creates reinforcing loops worsening the resource situation.

- State fragility weakens protections that would otherwise protect natural resources against degradation.

- Fear of scarcity induces the wealthy to protect their remaining resources through rent seeking, corruption and monopoly.

- Corruption increases state fragility, and fragile states are less able to defend against further corruption.

- More rent seeking, corruption and monopoly increases economic inequality.

- Inequality, rent seeking, corruption, and scarcity all make emissions mitigation harder, eventually worsening warming.

- Displacement breeds conflict, and conflict displaces people.

- State fragility breeds conflict, as demagogues blame “the other” for problems and nonviolent conflict resolution methods are less available.

- Economic inequality increases mortality, because mortality is an extreme outcome, and inequality puts more people in the vulnerable tail of the distribution.

The sixth item (emissions mitigation getting harder) is key, because it makes it clear that warming is endogenous. Without it, the other variables represent a climate-induced cascade of effects. In reality, I think we’re already seeing many of the tipping effects (resource and corruption effects on state fragility, for example) and the resulting governance problems are a primary cause of the failure to reduce emissions.

I’m sure I’ve missed a bunch of links, but this is already a case of John Muir’s idea, “When we try to pick out anything by itself, we find it hitched to everything else in the Universe.”

Unfortunately, most of the hitches here create reinforcing loops, which can amplify our predicament and cause catastrophic tipping events. I prefer to see this as an opportunity: we can run these vicious cycles in reverse, making them virtuous. Fighting corruption makes states less fragile, making mitigation more successful, reducing future warming and the cascade of side effects that would otherwise reinforce state fragility in the future. Corruption is just one of many places to start, and any progress is amplified. It’s just up to us to cross enough virtuous tipping points to get the whole system moving in a good direction.

Grand Challenges for Socioeconomic Systems Modeling

Following my big tent query, I was reexamining Axtell’s critique of SD aggregation and my response. My opinion hasn’t changed much: I still think Axtell’s critique of aggregation is very useful, albeit directed at a straw dog vision of SD that doesn’t exist, and that building bridges remains important.

As I was attempting to relocate the critique document, I ran across this nice article on Eight grand challenges in socio-environmental systems modeling.

Modeling is essential to characterize and explore complex societal and environmental issues in systematic and collaborative ways. Socio-environmental systems (SES) modeling integrates knowledge and perspectives into conceptual and computational tools that explicitly recognize how human decisions affect the environment. Depending on the modeling purpose, many SES modelers also realize that involvement of stakeholders and experts is fundamental to support social learning and decision-making processes for achieving improved environmental and social outcomes. The contribution of this paper lies in identifying and formulating grand challenges that need to be overcome to accelerate the development and adaptation of SES modeling. Eight challenges are delineated: bridging epistemologies across disciplines; multi-dimensional uncertainty assessment and management; scales and scaling issues; combining qualitative and quantitative methods and data; furthering the adoption and impacts of SES modeling on policy; capturing structural changes; representing human dimensions in SES; and leveraging new data types and sources. These challenges limit our ability to effectively use SES modeling to provide the knowledge and information essential for supporting decision making. Whereas some of these challenges are not unique to SES modeling and may be pervasive in other scientific fields, they still act as barriers as well as research opportunities for the SES modeling community. For each challenge, we outline basic steps that can be taken to surmount the underpinning barriers. Thus, the paper identifies priority research areas in SES modeling, chiefly related to progressing modeling products, processes and practices.

Elsawah et al., 2020

The findings are nicely summarized in Figure 1:

Not surprisingly, item #1 is … building bridges. This is why I’m more of a “big tent” guy. Is systems thinking a subset of system dynamics, or is system dynamics a subset of systems thinking? I think the appropriate answer is, “who cares?” Such disciplinary fence-building is occasionally informative, but more often needlessly divisive and useless for solving real-world problems.

It’s interesting to contrast this with George Richardson’s list for SD:

The potential pitfalls of our current successes suggest the time is right to sketch a view of outstanding problems in the field of system dynamics, to focus the attention of people in the field on especially promising or especially problematic issues. …

Understanding model behavior

Accumulating wise practice

Advancing practice

Accumulating results

Making models accessible

Qualitative mapping and formal modeling

Widening the base

Confidence and validation

Problems for the Future of System Dynamics

George P. Richardson

The contrasts here are interesting. Elsawah et al. are more interested in multiscale phenomena, data, uncertainty and systemic change (#5, which I think means autopoiesis, not merely change over time). I think these are all important and perhaps underappreciated priorities for the future of SD as well. Richardson on the other hand is more interested in validation and understanding of models, making progress cumulative, and widening participation in several ways.

More importantly, I think there’s really a lot of overlap – in fact I don’t think either party would disagree with anything on the other’s list. In particular, both support mixed qualitative and computational methods and increasing the influence of models.

I think Forrester’s view on influence is illuminating:

One hears repeatedly the question of how we in system dynamics might reach “decision makers.” With respect to the important questions, there are no decision makers. Those at the top of a hierarchy only appear to have influence. They can act on small questions and small deviations from current practice, but they are subservient to the constituencies that support them. This is true in both government and in corporations. The big issues cannot be dealt with in the realm of small decisions. If you want to nudge a small change in government, you can apply systems thinking logic, or draw a few causal loop diagrams, or hire a lobbyist, or bribe the right people. However, solutions to the most important sources of social discontent require reversing cherished policies that are causing the trouble. There are no decision makers with the power and courage to reverse ingrained policies that would be directly contrary to public expectations. Before one can hope to influence government, one must build the public constituency to support policy reversals.

System Dynamics—the Next Fifty Years

Jay W. Forrester

This neatly explains Forrester’s emphasis on education as a prerequisite for change. Richardson may agree, because this is essentially “widening the base” and “making models accessible”. My first impression was that Elsawah et al. were taking more of a “modeling priesthood” view of things, but in the end they write:

New kinds of interactive interfaces are also needed to help stakeholders access models, be it to make sense of simulation results (e.g. through monetization of values or other forms of impact representation), to shape assumptions and inputs in model development and scenario building, and to actively negotiate around inevitable conflicts and tradeoffs. The role of stakeholders should be much more expansive than a passive from experts, and rather is a co-creator of models, knowledge and solutions.

Where I sit in post-covid America, with atavistic desires for simpler times that never existed looming large in politics, broadening the base for model participation seems more important than ever. It’s just a bit daunting to compare the long time constant on learning with the short fuse on some of the big problems we hope these grand challenges will solve.

Should System Dynamics Have a Big Tent or Narrow Focus?

In a breakout in the student colloquium at ISDC 2022, we discussed the difficulty of getting a paper accepted into the conference, where the content was substantially a discrete event or agent simulation. Readers may know that I’m not automatically a fan of discrete models. Discrete time stinks. However, I think “discreteness” itself is not the enemy – it’s just that the way people approach some discrete models is bad, and continuous is often a good way to start.

On the flip side, there are certainly cases in which it’s sensible to start with a more granular, detailed model. In fact there are cases in which nonlinearity makes correct aggregation impossible in principle. This may not require going all the way to a discrete, agent model, but I think there’s a compelling case for the existence of systems in which the only good model is not a classic continuous time, aggregate, continuous value model. In between, there are also cases in which it may be practical to aggregate, but you don’t know how to do it a priori. In such cases, it’s useful to compare aggregate models with underlying detailed models to see what the aggregation rules should be, and to know where they break down.
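A minimal sketch of why nonlinearity can defeat aggregation, assuming a hypothetical convex response (`x ** 2`) and a small, made-up heterogeneous population:

```python
def response(x):
    """Hypothetical nonlinear (convex) response of one agent to its own state."""
    return x ** 2


agents = [1.0, 1.0, 1.0, 9.0]  # made-up heterogeneous states

# Aggregate model: apply the response to the average state.
mean_state = sum(agents) / len(agents)
aggregate = response(mean_state)

# Detailed model: average the individual responses.
detailed = sum(response(x) for x in agents) / len(agents)

print(aggregate, detailed)  # 9.0 versus 21.0
```

The two answers differ because the response of the average is not the average of the responses. The aggregate model is only correct if it also tracks how states are distributed across agents, which is exactly the kind of aggregation rule a detailed model can help discover.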

I guess this is a long way of saying that I favor a “big tent” interpretation of System Dynamics. We should be considering models broadly, with the goal of understanding complex systems irrespective of methodological limits. We should go where operational thinking takes us, even if it’s not continuous.

This doesn’t mean that everything is System Dynamics. I think there are lots of things that should generally be excluded. In particular, anything that lacks dynamics – at a minimum pure stock accumulation, but usually also feedback – doesn’t make the cut. While I think that good SD is almost always at the intersection of behavior and physics, we sometimes have nonbehavioral models at the conference, i.e. models that lack humans, and that’s OK because there are some interesting opportunities for cross-fertilization. But I would exclude models that address human phenomena, but with the kind of delusional behavioral model that you get when you assume perfect information, as in much of economics.

I think a more difficult question is, where should we draw the line between System Dynamics and model-free Systems Thinking? I think we do want some model-free work, because it’s the gateway drug, and often influential. But it’s also high risk, in the sense that it may involve drawing conclusions about behavior from complex maps, where we’ve known from the beginning that no one can intuitively solve a 10th order system. I think preserving the core of the SD genome, that conclusions should emerge from replicable, transparent, realistic simulations, is absolutely essential.

Finding SD conference papers

How to search the System Dynamics conference proceedings, and other places to find SD papers.

There’s been a lot of turbulence in the SD society web organization, which is greatly improved. One side effect is that conference proceedings have moved. The conference proceedings page now points to a dedicated subdomain.

If you want to do a directed search of the proceedings for papers on a particular topic, the google search syntax is now:

site:proceedings.systemdynamics.org topic

where ‘topic’ should be replaced by your terms of interest, as in

site:proceedings.systemdynamics.org stock flow

(This post was originally published in Oct. 2012; obsolete approaches have been removed for simplicity.)

Other places to look for papers include the System Dynamics Review and Google Scholar.