Long ago, in the MIT SD PhD seminar, a group of us replicated and critiqued a number of classic models. Some of those formed the basis for my model library. Around that time, Liz Keating wrote a nice summary of “How to Critique a Model.” That used to be on my web site in the mid-90s, but I lost track of it. I haven’t seen an adequate alternative, so I recently tracked down a copy. Here it is: SD Model Critique (thanks, Liz). I highly recommend a look, especially with the SD conference paper submission deadline looming.


The Health Care Death Spiral

Paul Krugman documents an ongoing health care death spiral in California:

Here’s the story: About 800,000 people in California who buy insurance on the individual market — as opposed to getting it through their employers — are covered by Anthem Blue Cross, a WellPoint subsidiary. These are the people who were recently told to expect dramatic rate increases, in some cases as high as 39 percent.

Why the huge increase? It’s not profiteering, says WellPoint, which claims instead (without using the term) that it’s facing a classic insurance death spiral.

Bear in mind that private health insurance only works if insurers can sell policies to both sick and healthy customers. If too many healthy people decide that they’d rather take their chances and remain uninsured, the risk pool deteriorates, forcing insurers to raise premiums. This, in turn, leads more healthy people to drop coverage, worsening the risk pool even further, and so on.

A death spiral arises when a positive feedback loop runs as a vicious cycle. Another example is Andy Ford’s utility death spiral. The existence of the positive feedback leads to counterintuitive policy prescriptions.
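To make the loop concrete, here is a toy sketch of the risk-pool spiral. It is not WellPoint’s pricing or Krugman’s analysis. The cost list, the `markup` willingness-to-pay rule, and the break-even pricing rule are all hypothetical assumptions, chosen only to expose the positive feedback.

```python
def simulate_risk_pool(costs, markup, years):
    """Toy adverse-selection loop (all rules here are hypothetical).

    costs  -- each person's expected annual medical cost
    markup -- a person buys coverage if premium <= cost * markup
    Returns a list of (premium, enrollees) pairs, one per year.
    """
    enrolled = list(costs)  # year 0: everyone starts in the pool
    history = []
    for _ in range(years):
        if not enrolled:
            break  # the pool has collapsed completely
        # The insurer prices at break-even for the people currently enrolled.
        premium = sum(enrolled) / len(enrolled)
        history.append((premium, len(enrolled)))
        # Anyone for whom coverage is now "too expensive" drops out;
        # these are the healthiest people, so the next pool is sicker.
        enrolled = [c for c in costs if premium <= c * markup]
    return history


if __name__ == "__main__":
    costs = [1000 * i for i in range(1, 11)]
    for year, (premium, n) in enumerate(simulate_risk_pool(costs, 1.0, 5)):
        print(f"year {year}: premium {premium:8.0f}, enrollees {n}")
```

With ten hypothetical enrollees whose expected costs run from 1,000 to 10,000 and no risk-aversion markup, each premium hike drives out the healthiest remaining members, so the premium climbs every year while the pool shrinks to its single sickest member. A larger markup (people valuing coverage above its expected cost) weakens the loop, and the pool stabilizes sooner with more people in it.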

The Dynamics of Science

First, check out SEED’s recent article, which asks: “When it comes to scientific publishing and fame, the rich get richer and the poor get poorer. How can we break this feedback loop?”

For to all those who have, more will be given, and they will have an abundance; but from those who have nothing, even what they have will be taken away.

—Matthew 25:29

Author John Wilbanks proposes to use richer metrics to evaluate scientists, going beyond publications to consider data, code, etc. That’s a good idea per se, but it’s a static solution to a dynamic problem. It seems to me that it spreads around the effects of the positive feedback from publications->resources->publications a little more broadly, but doesn’t necessarily change the gain of the loop. A better solution, if meritocracy is the goal, might be greater use of blind evaluation and changes to allocation mechanisms themselves.

The reason we care about this is that we’d like science to progress as quickly as possible. That involves crafting a reward system with some positive feedback, but not so much that it easily locks in to suboptimal paths. That’s partly a matter of the individual researcher, but there’s a larger question: how to ensure that good theories out-compete bad ones?
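One way to see why the gain of the loop matters more than which metrics feed it is a stylized allocation rule, assumed purely for illustration: each period’s new output is split among researchers in proportion to current output raised to a `gain` exponent. Nothing here comes from the SEED article or from Sterman and Wittenberg’s model. The budget, starting values, and exponents are hypothetical.

```python
def top_share(initial, gain, budget=10.0, periods=50):
    """Leading researcher's share of total output after `periods` rounds.

    Hypothetical allocation rule: each round, `budget` units of new output
    are split in proportion to current output ** gain.
      gain == 1 -> shares stay as they started
      gain  > 1 -> an early lead compounds (lock-in)
      gain  < 1 -> an early lead erodes
    """
    output = list(initial)
    for _ in range(periods):
        weights = [p ** gain for p in output]
        total = sum(weights)
        output = [p + budget * w / total for p, w in zip(output, weights)]
    return max(output) / sum(output)


if __name__ == "__main__":
    start = [1.2, 1.0, 1.0, 1.0]  # one researcher starts with a small edge
    print(f"initial share: {max(start) / sum(start):.3f}")
    for gain in (0.5, 1.0, 1.5):
        print(f"gain {gain}: leader's share {top_share(start, gain):.3f}")
```

Starting from a slight 1.2-to-1 edge for one of four hypothetical researchers, a gain above 1 lets the early lead compound, a gain of exactly 1 preserves the initial shares, and a gain below 1 erodes the lead. In this toy, broadening the metrics would only affect lock-in if it changed the exponent.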

Now check out the work of John Sterman and Jason Wittenberg on Kuhnian scientific revolutions.

Update: also check out filter bubbles.

(Dry) Lake Mead

I’m just back from two weeks camping in the desert. Ironically, we had a lot of rain. Apart from the annoyance of cooking in the rain, water in the desert is a wonderful sight.

We spent one night in transit at Las Vegas Bay campground on Lake Mead. We were surprised to discover that it’s not a bay anymore – it’s a wash. The lake has been declining for a decade and is now 100 feet below its maximum.

It turns out that this is not unprecedented – it happened in 1965, for example. After that relatively brief drought, it took a decade to claw back to “normal” levels.

The recent decline looks different to me, though – it’s not a surprising, abrupt decline, it’s a long, slow ramp, suggesting a persistent supply-demand imbalance. Bizarrely, it’s easy to get lake level data, but hard to find a coherent set of basin flow measurements. Would you invest in a company with a dwindling balance sheet, if they couldn’t provide you with an income statement?

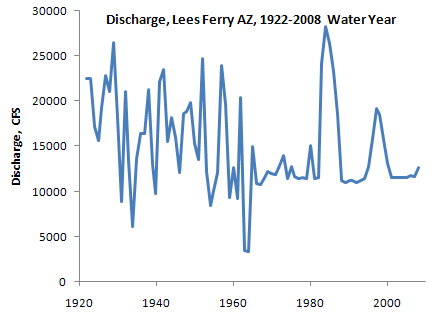

It appears to me that the Colorado River system is simply overallocated, and there hasn’t been any feedback between reality (actual water availability) and policy (water use, governed by the Law of the River). It also appears that the problem is not with the inflow to Lake Mead. Here’s discharge past the Lees Ferry gauge, which accounts for the bulk of the lake’s supply:

Notice that the post-2000 flows are low (probably reflecting mainly the statutorily required discharge from Glen Canyon Dam upstream), but hardly unprecedented. My hypothesis is that the de facto policy for managing water levels is to wait for good years to restore the excess withdrawals of bad years, and that demand management measures in the interim are toothless. That worked back when river flows were not fully subscribed. The trouble is, supply isn’t stationary, and there’s no reason to assume that it will return to levels that prevailed in the early years of river compacts. At the same time, demand isn’t stationary either, as population growth in the west drives it up. To avoid Lake Mead drying up, the system is going to have to get a spine, i.e. there’s going to have to be some feedback between water availability and demand.
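The difference between open-loop allocation and a system with a spine can be sketched as a single stock. This is a hypothetical toy, not a model of the Colorado basin: the inflow, demand, and capacity figures are invented round numbers, and the `feedback` rule (cut withdrawals in proportion to how much of the reservoir is empty) is just one simple assumed form of demand response.

```python
def simulate_storage(inflows, demand, initial, capacity, feedback=0.0):
    """Toy reservoir: storage accumulates inflow minus withdrawals.

    feedback == 0 -> withdrawals always equal `demand` (open loop).
    feedback > 0  -> withdrawals are cut in proportion to the fraction
                     of capacity that is empty (a hypothetical rule).
    Returns end-of-year storage for each year, clipped to [0, capacity].
    """
    storage = initial
    path = []
    for inflow in inflows:
        empty_fraction = 1.0 - storage / capacity  # 0 when full, 1 when empty
        withdrawal = demand * max(0.0, 1.0 - feedback * empty_fraction)
        storage = min(capacity, max(0.0, storage + inflow - withdrawal))
        path.append(storage)
    return path


if __name__ == "__main__":
    inflows = [9.0] * 30  # persistent supply-demand imbalance
    open_loop = simulate_storage(inflows, 10.0, 26.0, 26.0)
    closed_loop = simulate_storage(inflows, 10.0, 26.0, 26.0, feedback=1.0)
    print(f"open loop after 30 years:   {open_loop[-1]:.1f}")
    print(f"closed loop after 30 years: {closed_loop[-1]:.1f}")
```

With inflow fixed at 9 units a year against demand of 10, the open-loop reservoir loses a unit every year and is empty after 26 years: a long, slow ramp rather than an abrupt drop. With `feedback=1.0`, withdrawals shrink as storage falls, and the stock settles at 23.4 units, where the reduced withdrawals just match inflow.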

I’m sure there’s a much deeper understanding of water dynamics among various managers of the Colorado basin than I’ve presented here. But if there is, they’re certainly not sharing it very effectively, because it’s hard for an informed tinkerer like me to get the big picture. Colorado basin managers should heed Krys Stave’s advice:

Water managers increasingly are faced with the challenge of building public or stakeholder support for resource management strategies. Building support requires raising stakeholder awareness of resource problems and understanding about the consequences of different policy options.

Hadley cells for lunch

At lunch today we were amazed by these near-perfect convection cells that formed in a pot of quinoa. You can DIY at NOAA. I think this is an instance of Bénard-Marangoni convection, because the surface is free, though the thinness assumptions are likely violated, and quinoa is not quite an ideal liquid. Anyway, it’s an interesting phenomenon because the dynamics involve a surface tension gradient, not just heat transfer. See this and this.

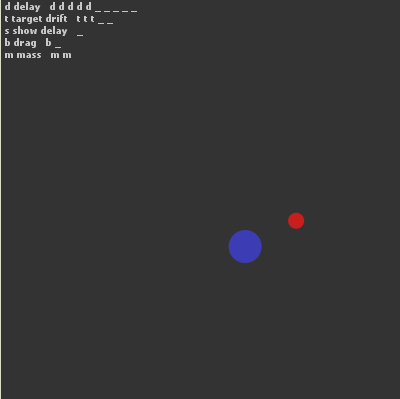
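For reference, the standard dimensionless groups make the thinness point concrete. Buoyancy-driven (Rayleigh-Bénard) convection is governed by the Rayleigh number Ra, and surface-tension-driven (Marangoni) convection by the Marangoni number Ma. Here h is the layer depth, ΔT the temperature difference across it, g gravity, β the thermal expansion coefficient, ν and μ = ρν the kinematic and dynamic viscosities, κ the thermal diffusivity, and σ the surface tension. These are textbook definitions; I haven’t estimated any of the values for quinoa.

```latex
\mathrm{Ra} = \frac{g\,\beta\,\Delta T\,h^{3}}{\nu\,\kappa},
\qquad
\mathrm{Ma} = \frac{\left(-\dfrac{d\sigma}{dT}\right)\Delta T\,h}{\mu\,\kappa},
\qquad
\frac{\mathrm{Ra}}{\mathrm{Ma}} = \frac{\rho\,g\,\beta\,h^{2}}{-\,d\sigma/dT}.
```

Because the ratio scales with h², surface tension gradients dominate in thin layers, which is why the thinness assumption matters for calling this Bénard-Marangoni rather than ordinary Rayleigh-Bénard convection.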

Fun with Processing

Processing is a very clean, Java-based environment targeted at information visualization and art. I got curious about it, so I built a simple interactive game that demonstrates how dynamic complexity makes system control difficult. Click through to play:

I think there’s a lot of potential for elegant presentation with Processing. There are several physics libraries and many simulations with a physical, chemical, or mathematical basis at OpenProcessing.org:

If you like code, it’s definitely worth a look.

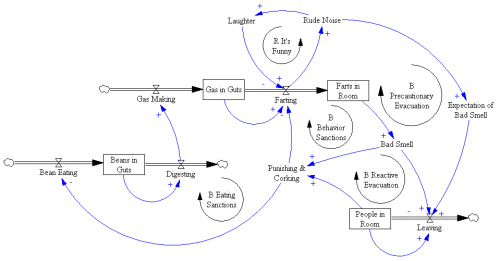
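The game itself isn’t reproduced here, but the core difficulty it demonstrates can be sketched in a few lines of Python (rather than Processing). The setup is a hypothetical stand-in: a player nudges a quantity toward a target, each adjustment takes effect only after `delay` steps, and the player’s rule looks at the gap between the quantity and the target while ignoring adjustments already in the pipeline.

```python
from collections import deque


def play(gain, delay, target=100.0, steps=60):
    """Naive proportional control of a stock through a pipeline delay.

    Each step the player orders gain * (target - stock), ignoring orders
    already in transit; an order reaches the stock `delay` steps later.
    Returns the stock after each step.
    """
    stock = 0.0
    pipeline = deque([0.0] * delay)  # orders placed but not yet arrived
    path = []
    for _ in range(steps):
        order = gain * (target - stock)  # negative orders pull the stock down
        pipeline.append(order)
        stock += pipeline.popleft()  # the order placed `delay` steps ago lands
        path.append(stock)
    return path


if __name__ == "__main__":
    for delay in (0, 3):
        peak = max(play(gain=0.5, delay=delay))
        print(f"delay={delay}: peak stock {peak:.1f} (target 100)")
```

With no delay, this rule closes half the gap each step and never overshoots. With a three-step delay, the identical rule overshoots badly and, at this gain, oscillates with growing amplitude; the player has to either lower the gain or account for the supply line.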

Dynamics of … er … flatulence

I sat down over lunch to develop a stock-flow diagram with my kids. This is what happens when you teach system dynamics to young boys:

Notice that there’s no outflow for the unpleasantries, because they couldn’t agree on whether the uptake mechanism was chemical reaction or physical transport.

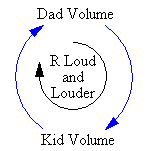

Along the way, we made a process observation. We started off quiet, but gradually talked louder and louder until we were practically shouting at each other. The boys were quick to identify the dynamic:

Jay Forrester always advocates tackling the biggest problems, because they’re no harder to solve than trivial ones, but sometimes it’s refreshing to lighten up and take on systems of limited importance.

The side effects of parachuting cats

I ran across a nice factual account of the fantastic “cat drop” story of ecological side effects immortalized in Alan AtKisson’s song.

It reminded me of another great account of the complex side effects of ecosystem disturbance, from the NYT last year, supplemented with a bit of Wikipedia:

- In 1878, rabbits were introduced to Macquarie Island

- Also in the 19th century, mice were inadvertently introduced

- Cats were subsequently introduced, to reduce mouse depredation of supplies stored on the island

- The rabbits multiplied, as they do

- In 1968, myxoma virus was released to control rabbits, successfully decimating them

- The now-hungry cats turned to the seabird population for food

- A campaign begun in 1985 eradicated the cats by 2000

- The rabbits, now predator-free, rebounded explosively

- Rabbit browsing drastically changed vegetation; vegetation changes caused soil instability, wiping out seabird nesting sites

- Rodents also rebounded, turning to seabird chicks for food

An expensive pan-rodent eradication plan is now underway.

But this time, administrators are prepared to make course corrections if things do not turn out according to plan. “This study clearly demonstrates that when you’re doing a removal effort, you don’t know exactly what the outcome will be,” said Barry Rice, an invasive species specialist at the Nature Conservancy. “You can’t just go in and make a single surgical strike. Every kind of management you do is going to cause some damage.”
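The rabbit rebound (cats removed, rabbits explode) is the easiest link in that chain to sketch. This is a deliberately crude, hypothetical model: logistic rabbit growth, with a fixed cat population that removes a constant fraction of rabbits per cat each year. Every parameter is invented, and the sketch leaves out the myxoma virus, the mice, the vegetation, and the seabirds entirely.

```python
def rabbit_path(years, cat_removal_year, r=1.0, k=1000.0, cats=20.0, attack=0.04):
    """Yearly rabbit population; cats are present until `cat_removal_year`.

    Hypothetical discrete-time model: logistic growth at rate r toward
    carrying capacity k, minus predation of attack * cats * rabbits.
    """
    rabbits = 100.0
    path = []
    for year in range(years):
        predators = cats if year < cat_removal_year else 0.0
        growth = r * rabbits * (1.0 - rabbits / k)
        eaten = attack * predators * rabbits
        rabbits = max(0.0, rabbits + growth - eaten)
        path.append(rabbits)
    return path


if __name__ == "__main__":
    path = rabbit_path(years=40, cat_removal_year=20)
    print(f"last year with cats: {path[19]:.0f} rabbits")
    print(f"20 years after removal: {path[-1]:.0f} rabbits")
```

With these made-up numbers, cat predation holds the rabbits near 200, a fifth of what the vegetation could support in the model; once the cats are gone, the population heads for the carrying capacity of 1,000 within a few years.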

The Seven Deadly Sins of Managing Complex Systems

I was rereading The Fifth Discipline on the way to Boston the other day, and something got me started on this. Wrath, greed, sloth, pride, lust, envy, and gluttony are the downfall of individuals, but what about the downfall of systems? Here’s my list, in no particular order:

- Information pollution. Sometimes known as lying, but also common in milder forms, such as greenwash. Example: twenty years ago, the “recycled” symbol was redefined to mean “recyclable” – a big dilution of meaning.

- Elimination of diversity. Example: overconsolidation of industries (finance, telecom, …). As Jay Forrester reportedly said, “free trade is a mechanism for allowing all regions to reach all limits at once.”

- Changing the top-level rules in pursuit of personal gain. Example: the Starpower game. As long as we pretend to want to maximize welfare in some broad sense, the system rules need to provide an equitable framework, within which individuals can pursue self-interest.

- Certainty. Planning for it leads to fragile strategies. If you can’t imagine a way you could be wrong, you’re probably a fanatic.

- Elimination of slack. Normally this is regarded as a form of optimization, but a system without any slack can’t change (except catastrophically). How are teachers supposed to improve their teaching when every minute is filled with requirements?

- Superstition. Attribution of cause by correlation or coincidence, including misapplied pattern-matching.

- The four horsemen from classic SD work on flawed mental models: linear, static, open-loop, laundry-list thinking.

That’s seven (cheating a little). But I think there are more candidates that don’t quite make the big time:

- Impatience. Don’t just do something, stand there. Sometimes.

- Failure to account for delays.

- Abstention from top-level decision making (essentially not voting).
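The “elimination of slack” item has a precise analogue in queueing theory, borrowed here as an illustration rather than as part of the original argument. In the textbook M/M/1 queue (random arrivals, one server, exponentially distributed service times), the mean time a job spends in the system is the bare service time divided by one minus utilization, so as utilization approaches 100% the delay grows without bound. The function name below is hypothetical.

```python
def mm1_time_in_system(utilization, service_time=1.0):
    """Mean time a job spends in an M/M/1 queue (waiting plus service).

    Textbook result W = service_time / (1 - utilization), valid only for
    0 <= utilization < 1; at utilization >= 1 the queue grows without bound.
    """
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in [0, 1)")
    return service_time / (1.0 - utilization)


if __name__ == "__main__":
    for rho in (0.5, 0.8, 0.9, 0.99):
        print(f"utilization {rho:.0%}: time in system "
              f"{mm1_time_in_system(rho):.1f} x service time")
```

At 50% utilization a job takes twice its bare service time; at 90%, ten times; at 99%, about a hundred times. A fully booked system has no capacity left to absorb a disturbance, let alone to change.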

The very idea of compiling such a list only makes sense if we’re talking about the downfall of human systems, or systems managed for the benefit of “us” in some loose sense, but perhaps anthropocentrism is a sin in itself.

I’m sure others can think of more! I’d be interested to hear about them in comments.

Fit to data, good or evil?

The following is another extended excerpt from Jim Thompson and Jim Hines’ work on financial guarantee programs. The motivation was a client request for comparison of modeling results to data. The report pushes back a little, explaining some important limitations of model-data comparisons (though it ultimately also fulfills the request). I have a slightly different perspective, which I’ll try to indicate with some comments, but on the whole I find this to be an insightful and provocative essay.

First and foremost, we do not want to give credence to the erroneous belief that good models match historical time series and bad models don’t. Second, we do not want to over-emphasize the importance of modeling to the process which we have undertaken, nor to imply that modeling is an end-product.

In this report we indicate why a good match between simulated and historical time series is not always important or interesting and how it can be misleading. Note that we are talking about comparing model output and historical time series. We do not address the separate issue of the use of data in creating computer models. In fact, we made heavy use of data in constructing our model and interpreting the output — including first hand experience, interviews, written descriptions, and time series.

This is a key point. Models that don’t report fit to data are often accused of not using any. In fact, fit to numerical data is only one of a number of tests of model quality that can be performed. Alone, it’s rather weak. In a consulting engagement, I once ran across a marketing science model that yielded a spectacular fit of sales volume against data, given advertising, price, holidays, and other inputs – an R² of 0.95 or so. It turns out that the model was a linear regression with a “seasonality” parameter for every week. Because there were only 3 years of data, those 52 parameters were largely responsible for the good fit (R² fell below 0.7 if they were omitted). Stripped of those parameters, the underlying regression failed all kinds of reality checks.
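The marketing model and its data aren’t available, but the mechanism is easy to reproduce with synthetic numbers. The sketch below assumes NumPy. It generates three years of weekly “sales” driven only by a hypothetical price variable plus noise, with no seasonality at all, then fits the data twice: once with an intercept and price, and once with a dummy for every week of the year in place of the intercept. The data are random draws, and the resulting R² values are not meant to match the ones above.

```python
import numpy as np


def r_squared(X, y):
    """R^2 of an ordinary least-squares fit of y on the columns of X."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)


rng = np.random.default_rng(0)
weeks = np.arange(3 * 52)                  # three years of weekly data
price = rng.normal(10.0, 1.0, weeks.size)  # hypothetical driver
# Synthetic sales with NO true seasonality: a price effect plus noise.
sales = 100.0 - 3.0 * price + rng.normal(0.0, 5.0, weeks.size)

X_simple = np.column_stack([np.ones(weeks.size), price])
week_dummies = np.eye(52)[weeks % 52]      # one column per week of the year
X_seasonal = np.column_stack([week_dummies, price])

r2_simple = r_squared(X_simple, sales)
r2_seasonal = r_squared(X_seasonal, sales)
print(f"R^2 without weekly dummies: {r2_simple:.2f}")
print(f"R^2 with 52 weekly dummies: {r2_seasonal:.2f}")
```

Because the 52 dummies sum to a column of ones, the second model nests the first, so its R² can only go up. Here the increase is pure curve-fitting of noise, with only three observations behind each weekly parameter.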