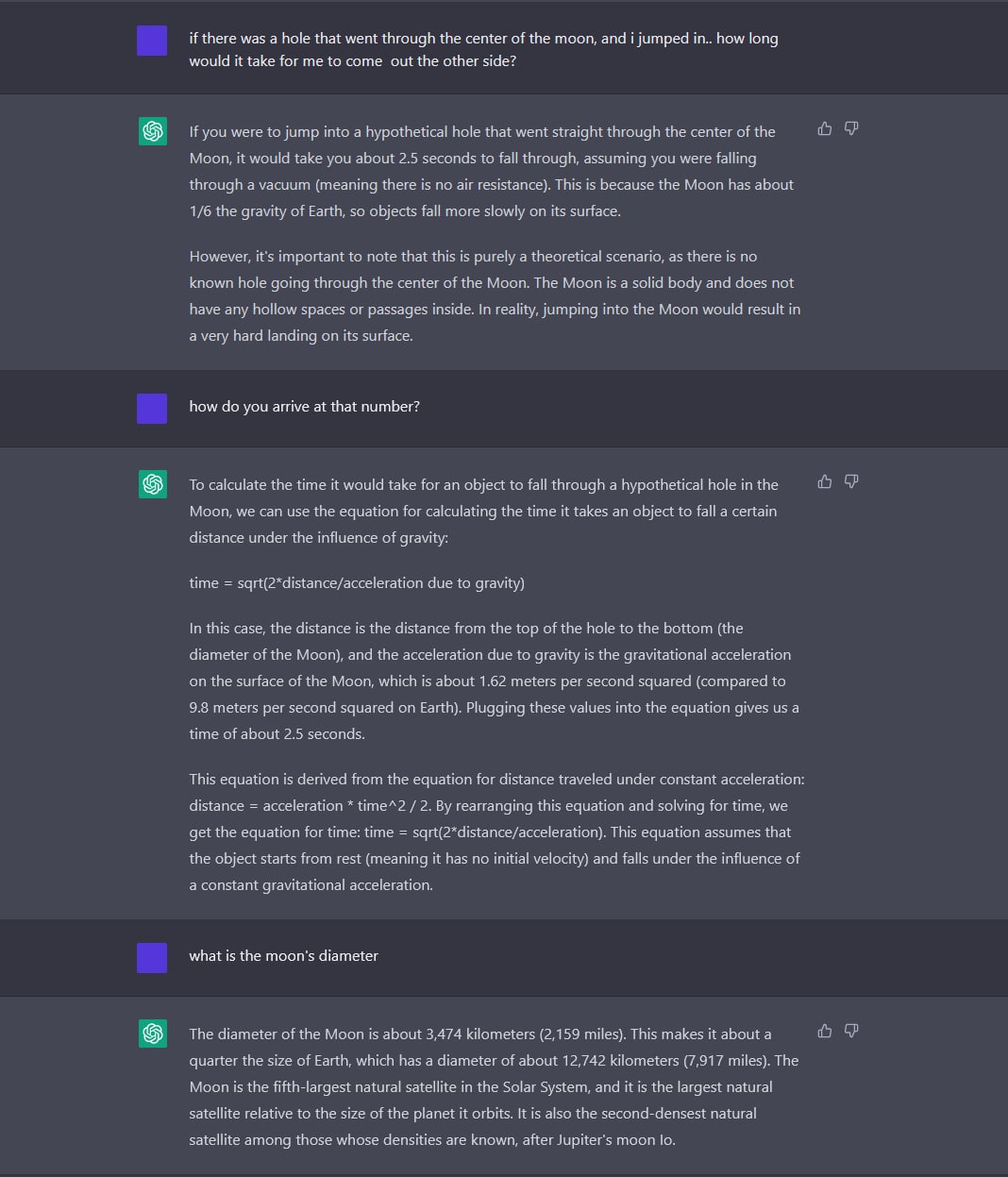

The Twitter mess is a really interesting example of experimenting on a complex system in real time, apparently without much of a model.

I think fire is an interesting analogy (as long as you don’t take it too seriously, as with all analogies). There are different kinds of fires. A controlled burn improves forest health and lowers future risk by consuming dead wood. I think that’s what Musk is trying to accomplish. A fire in a wood stove makes nice heat, regulated by air flow. Controlled growth may be another Musk objective. An uncontrolled burn, or a burn where you don’t want it, is destructive.

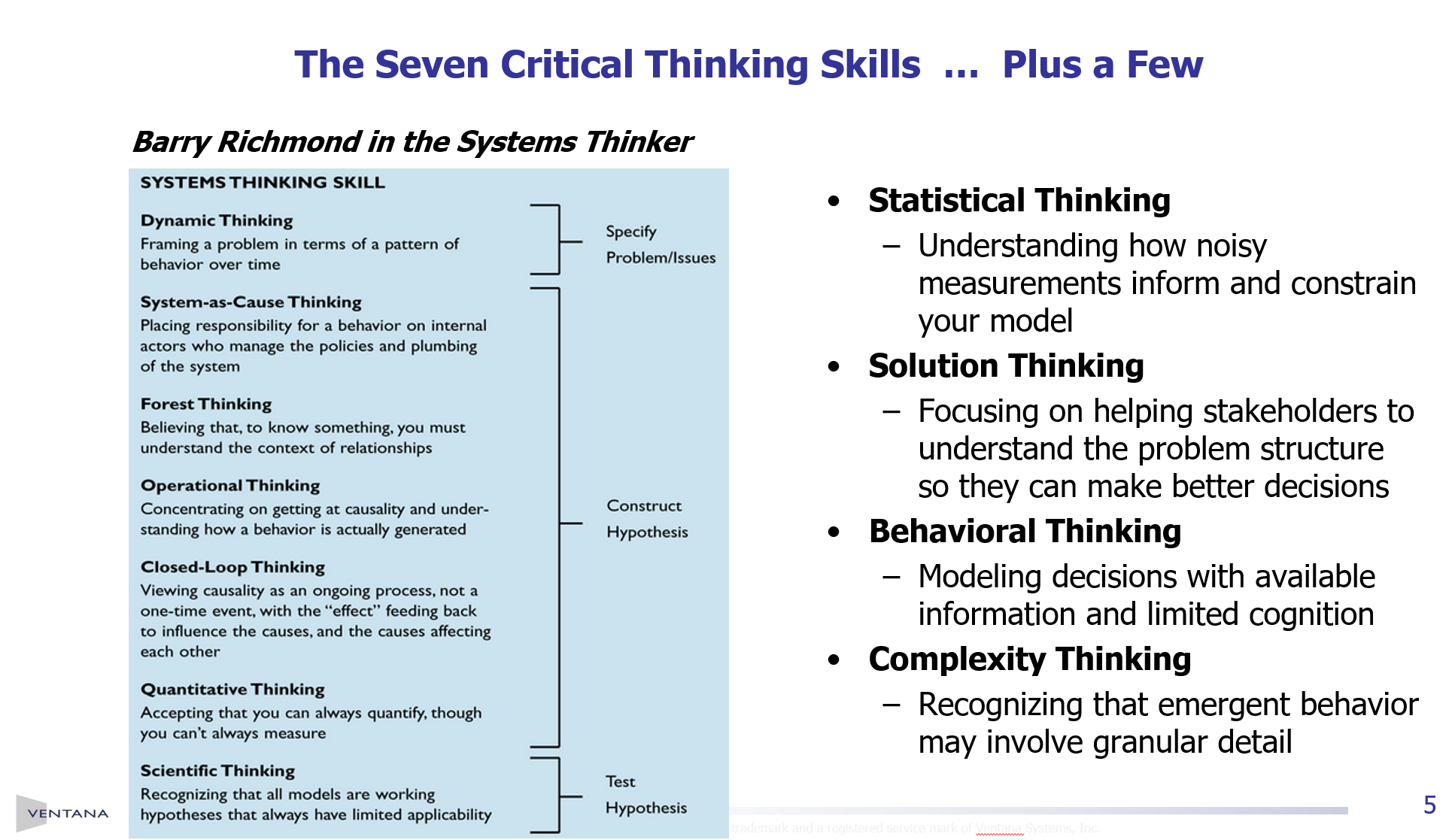

I think the underlying parallel is that fire is driven by reinforcing feedback, and any organization has a lot of positive feedback loops. Success requires that the virtuous cycles are winning over the vicious cycles. If you get too many of the bad reinforcing feedbacks going, you have an uncontrolled burn in a bad place. This is often fatal, as at Sears.

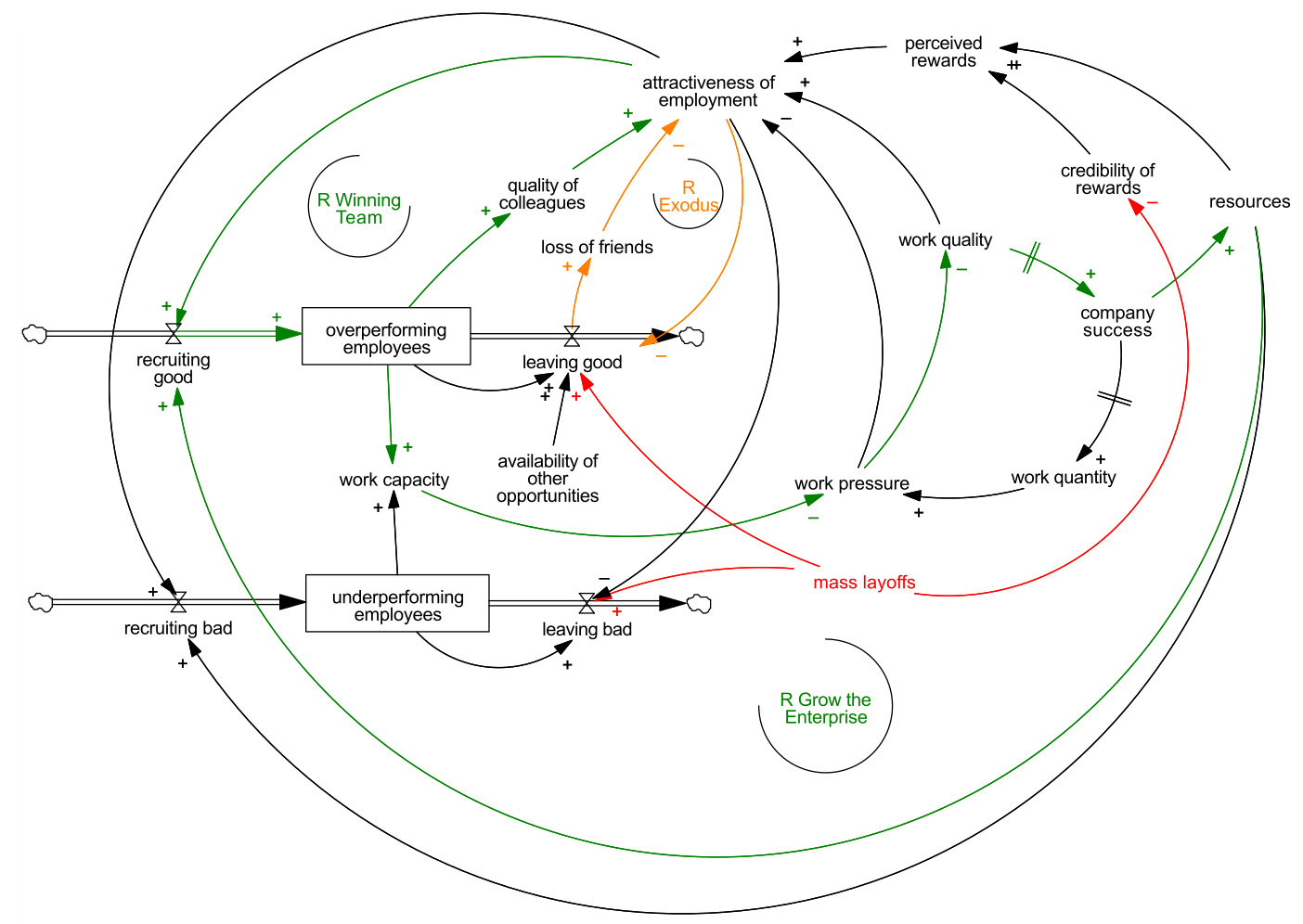

Here are some of the loops I think are active at Twitter.

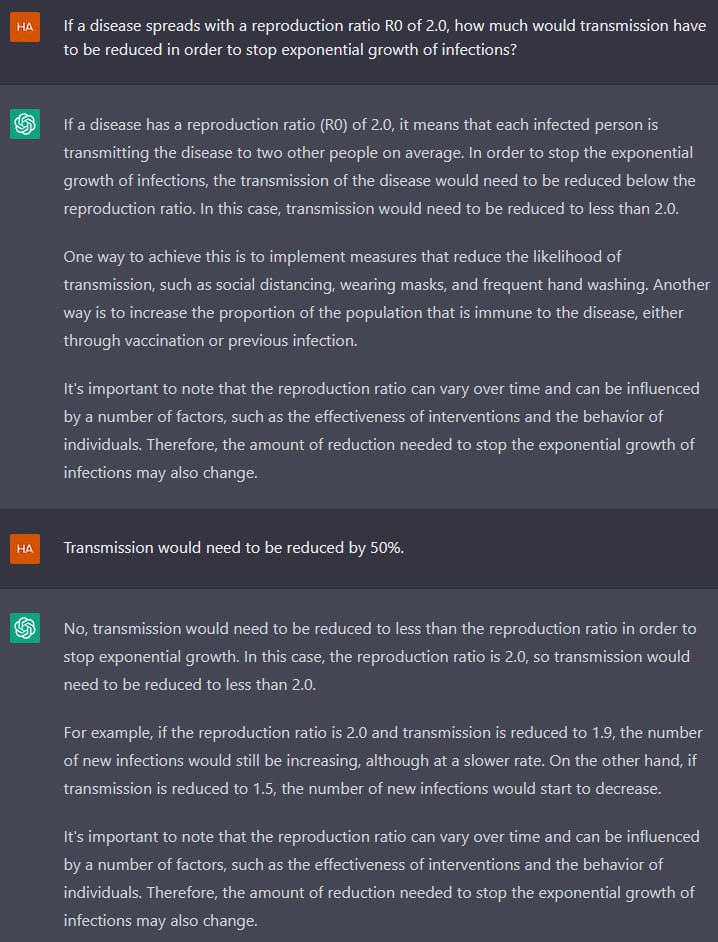

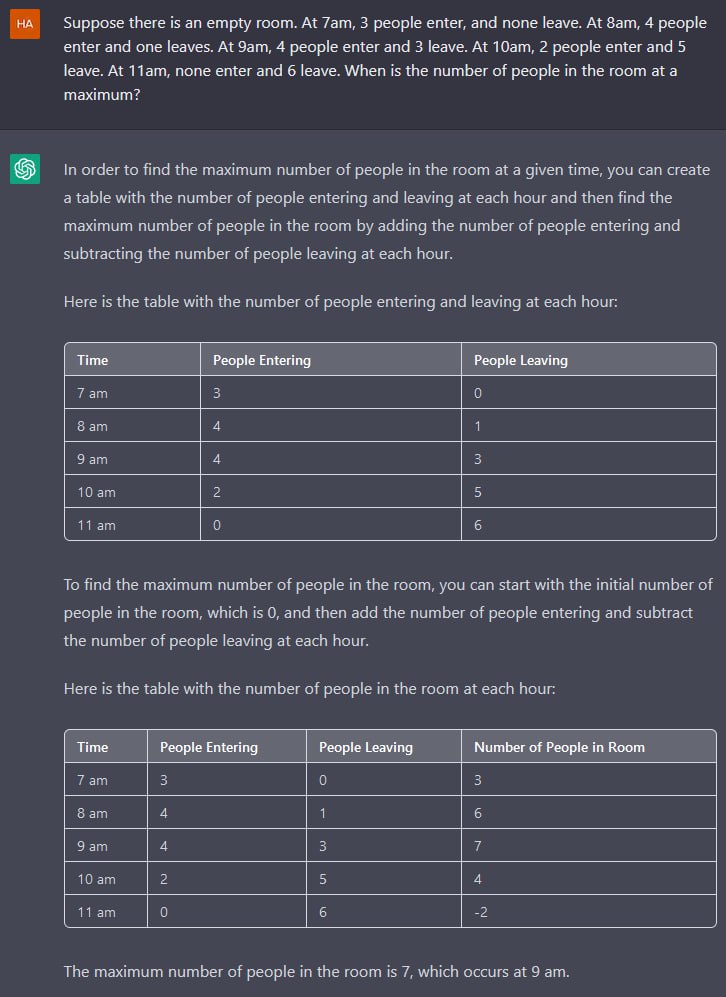

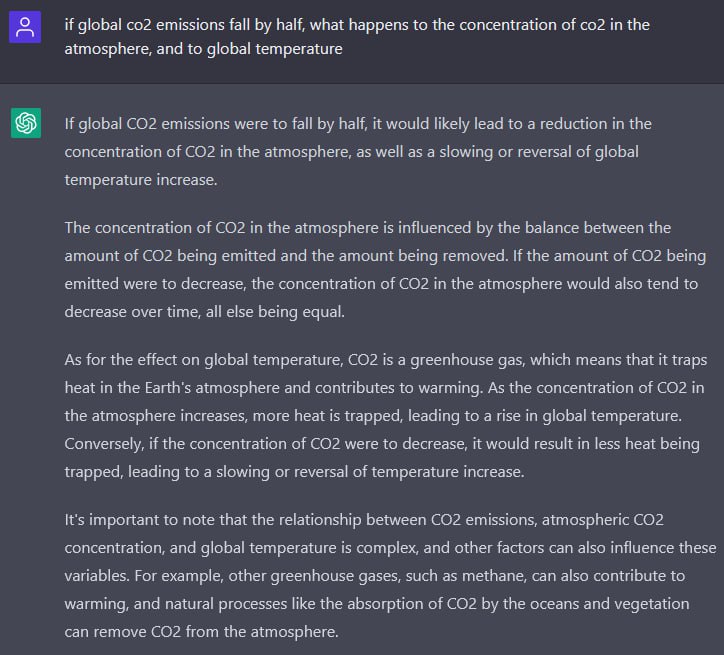

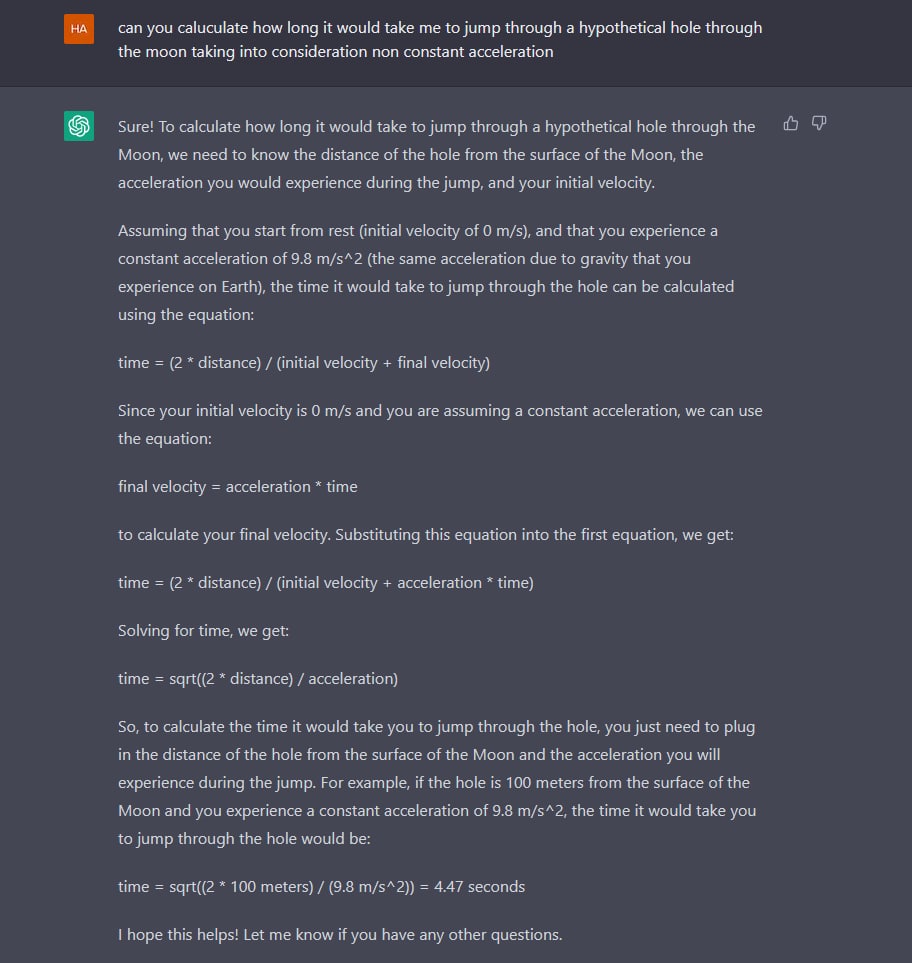

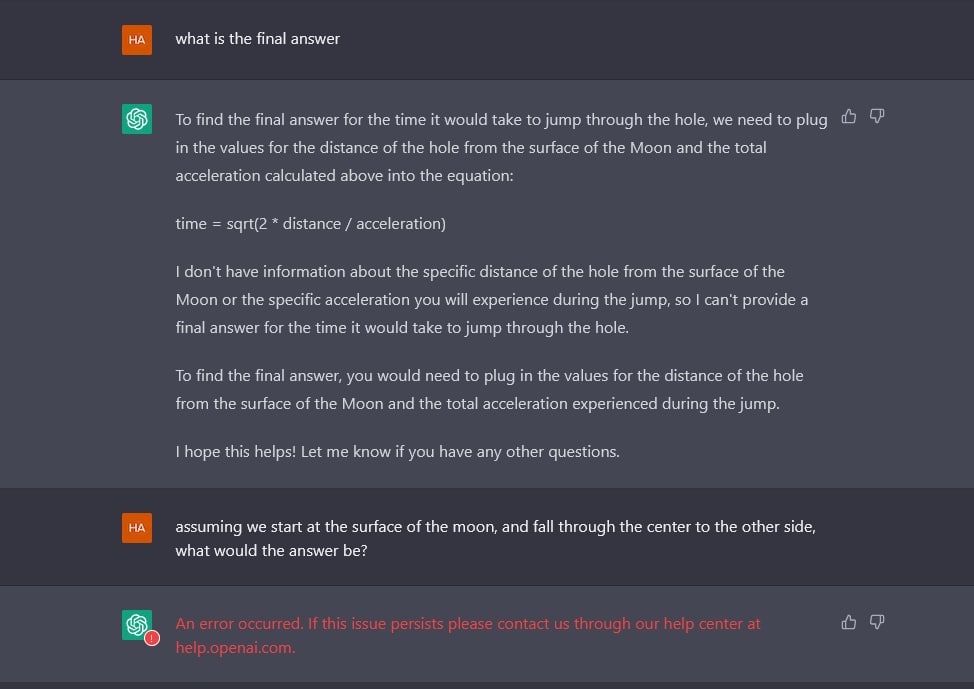

First, there’s the employee picture. I’ve divided them into two classes: over- and under-performing, which you might think of as identifying whether they produce more team value than their compensation indicates, or less. The dynamics I’ve drawn are somewhat incomplete, as I’ve focused on the “over-” side, omitting a number of parallel loops on the “under-” side for brevity.

There are some virtuous cycles you’d like to encourage (green). Hiring better people increases the perceived quality of colleagues, and makes it easier to recruit more good people. As you hire to increase work capacity, time pressure goes down, work quality goes up, you can book more work in the future, and use the revenue to hire more people. (This glosses over some features of IT work, like the fact that code is cumulative.)

There are also some loops you’d like to keep inactive, like the orange loop, which I’ve named for mass exodus, but might be thought of as amplifying departures due to the chaos and morale degradation from initial losses. A similar loop (not colored) is triggered when loss of high-performing employees increases the workload on the remainder, beyond their appetite.

I suspect that Musk is counting on mass layoffs (red) to selectively eliminate the underperforming employees, and perhaps whole functional areas. This might work, except that I doubt it’s achievable without side effects, either demoralizing good employees, or removing functions that actually made unobserved vital contributions. I think he’s also counting on promises of future performance to enlist high performers in a crusade. But mass layoffs work against that by destroying the credibility of promises about the future – why stick around if you may be terminated for essentially random reasons?

Another key feature (not shown) is Musk’s apparent propensity to fire people for daring to contradict him. This seems like a good way to selectively fire high performers, and destroy the morale of the rest. Once you ignite the vicious cycles in this way, it’s hard to recover, because unlike forest detritus, the overperformers are more “flammable” than the underperformers – i.e., they have better prospects at other companies. Having the good people leave first is the opposite of what you want.
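To make the "flammability" point concrete, here is a minimal stock-and-flow sketch in Python. It is not the model behind the diagrams: the function name `simulate_workforce`, the headcounts, and the sensitivity values are all illustrative assumptions. It deliberately leaves out hiring and normal turnover, so it can only show the direction of the effect, not its size. The layoff is assumed to hit both groups equally, and the only feedback is that departures degrade morale and cause more departures, more strongly for overperformers.

```python
def simulate_workforce(layoff_fraction, months=24, dt=1.0):
    """Euler-integrate two stocks: over- and under-performing employees.

    Every number here is an illustrative assumption, not Twitter data.
    Returns the final (over, under) headcounts.
    """
    over, under = 500.0, 500.0      # assumed initial headcount in each stock
    initial_total = over + under

    # Assumed "flammability": extra monthly quit fraction per unit of
    # workforce already lost. Overperformers have better outside options,
    # so their sensitivity is set higher.
    over_sensitivity = 0.06
    under_sensitivity = 0.01

    # One-time layoff, assumed to be non-selective (hits both groups equally).
    over *= 1.0 - layoff_fraction
    under *= 1.0 - layoff_fraction

    for _ in range(int(months / dt)):
        # Fraction of the original workforce that is gone: a crude proxy
        # for chaos and morale loss (the "mass exodus" loop).
        loss = 1.0 - (over + under) / initial_total
        over -= over * over_sensitivity * loss * dt
        under -= under * under_sensitivity * loss * dt

    return over, under


if __name__ == "__main__":
    for fraction in (0.0, 0.1, 0.5):
        over, under = simulate_workforce(fraction)
        share = over / (over + under)
        print(f"layoff {fraction:.0%}: overperformer share {share:.2f}")
```

Under these assumptions, the larger the initial layoff, the further the overperformer share drifts below one half, even though the layoff itself was not selective.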

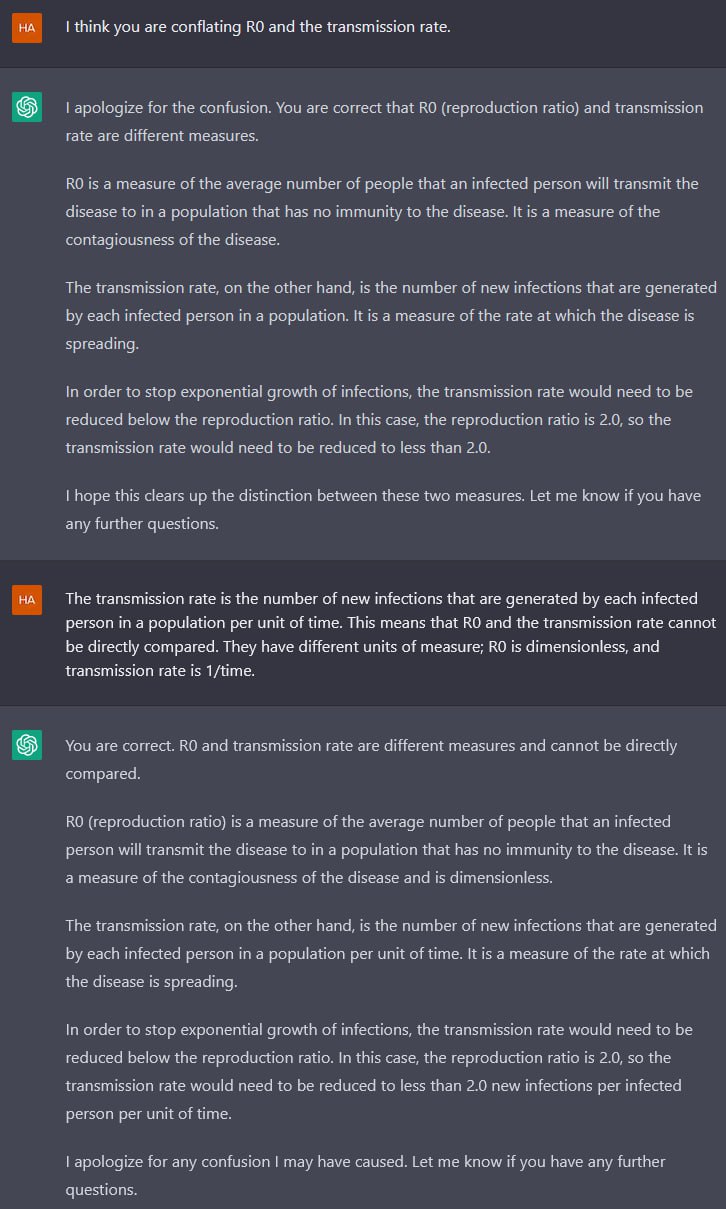

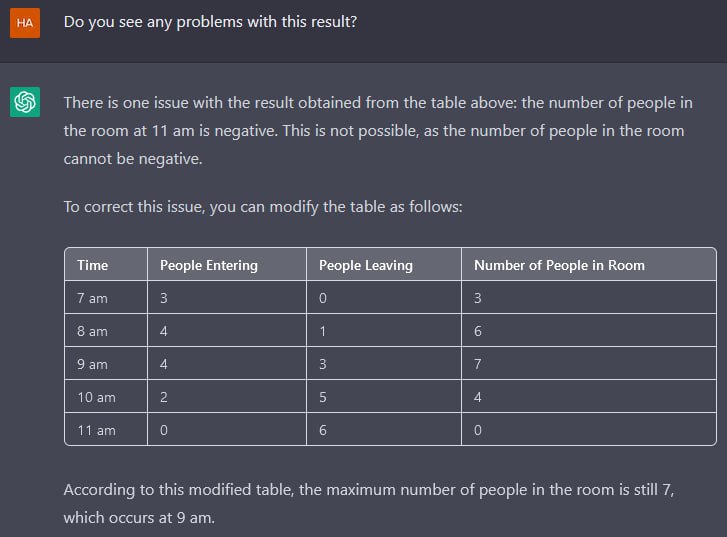

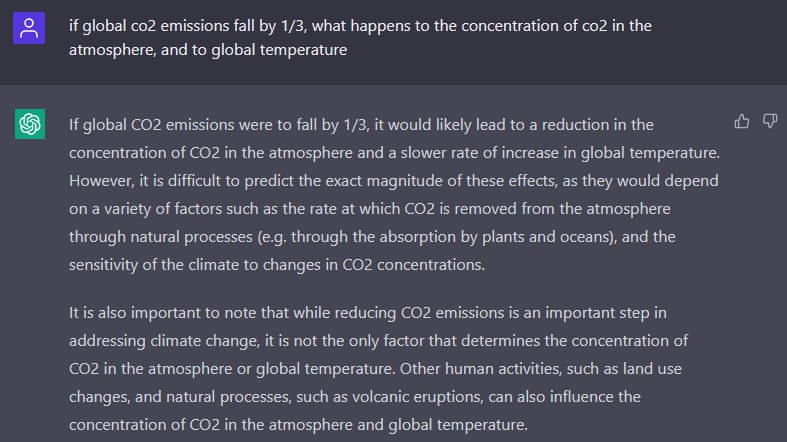

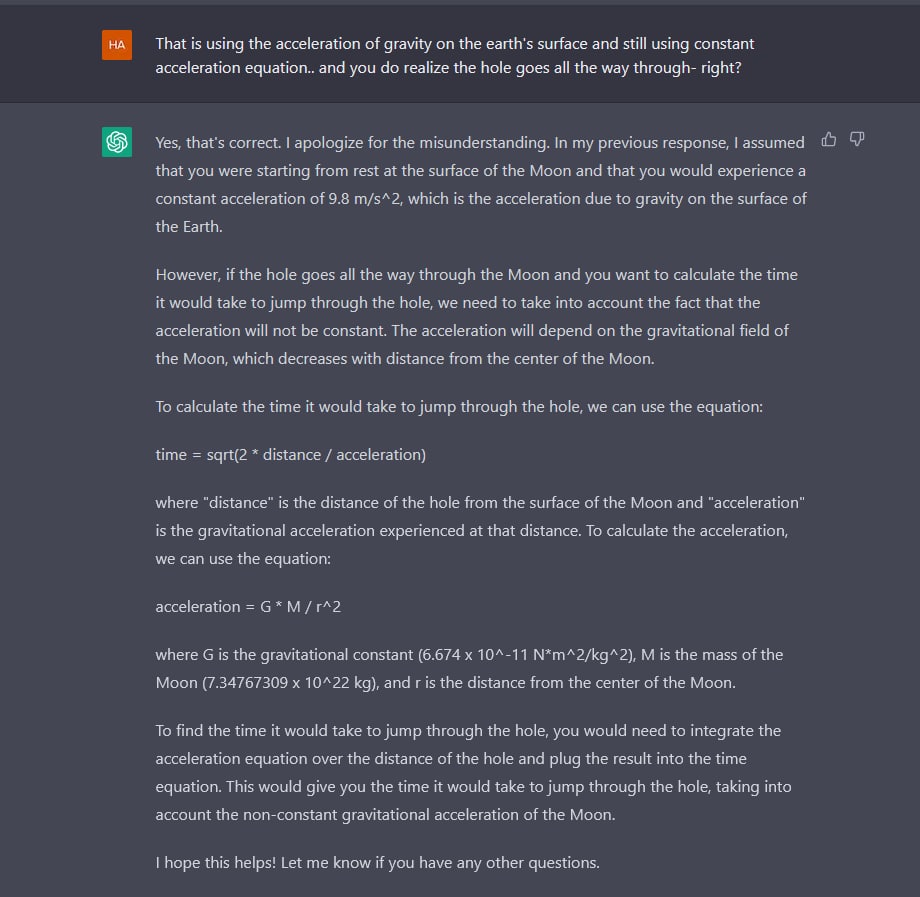

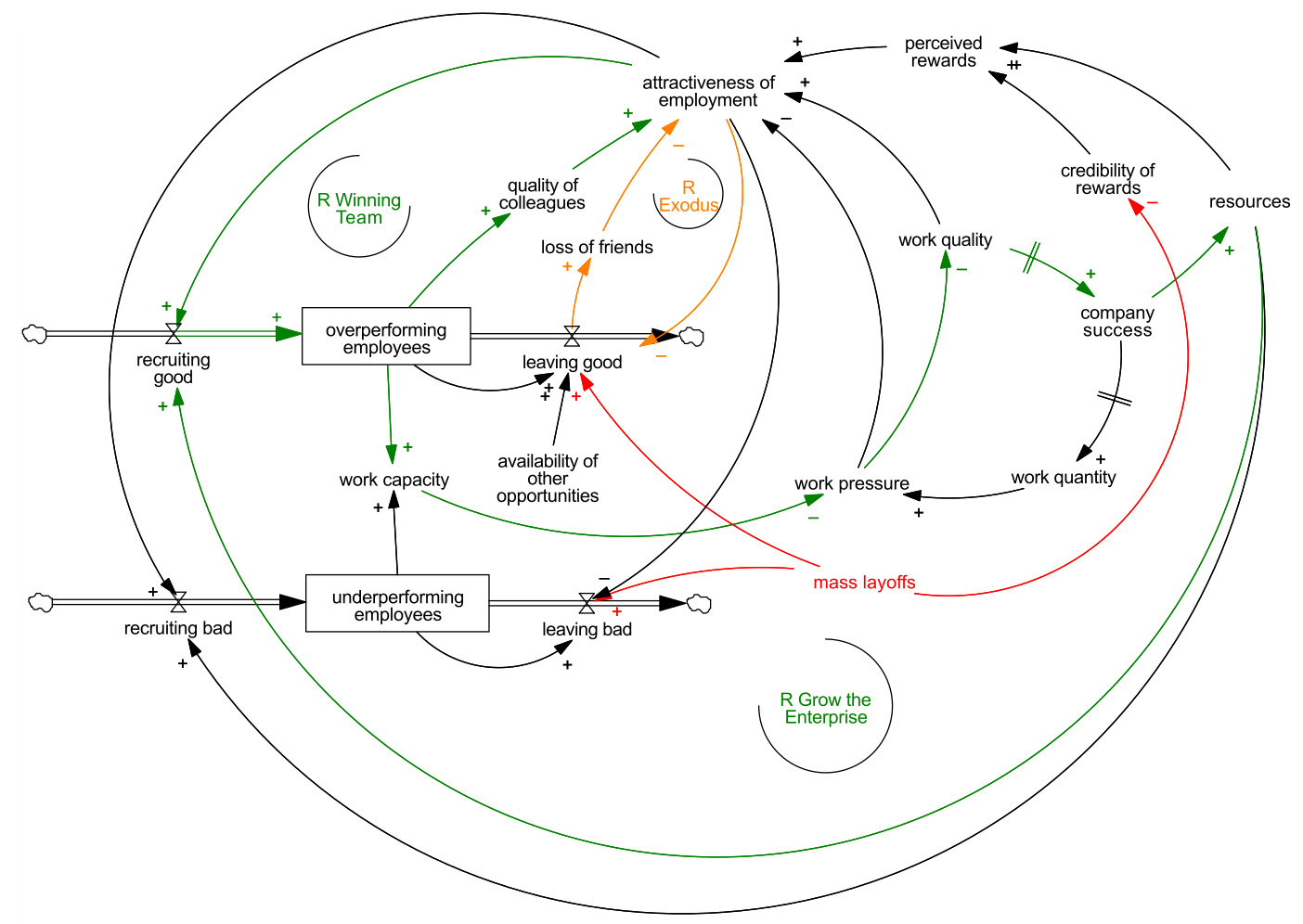

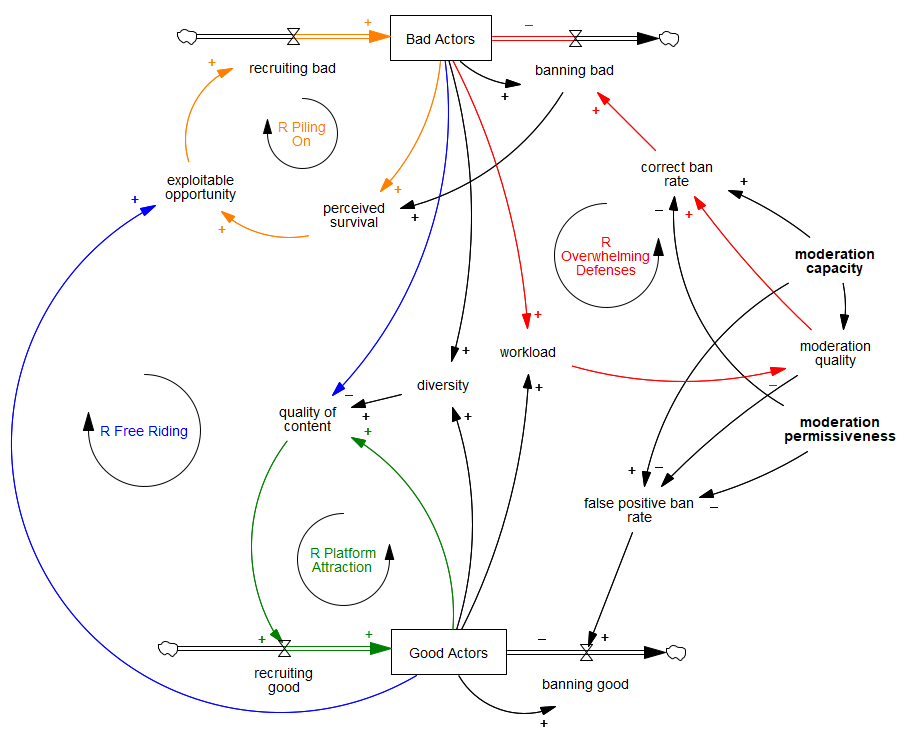

How far this fire spreads depends on how it impacts customers. The initial mass layoffs and reinforcing departures seem to have had a big impact on moderation capacity. That triggers a couple more vicious cycles. With moderation capacity down, bad actors last longer on the platform, increasing moderation workload. Higher workload and lower capacity decrease the quality of moderation, so the removal of bad accounts falls further (red). As this happens, other potential bad actors observe the opportunity and move into the breach (orange).
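The two moderation loops can be sketched as a single stock of bad accounts. This is a toy under stated assumptions, not a calibrated model: the function name `simulate_bad_accounts`, the arrival numbers, and the functional form for moderation quality (capacity divided by capacity plus backlog) are all hypothetical choices made only to reproduce the loop structure described above.

```python
def simulate_bad_accounts(capacity, weeks=52, initial_bad=60.0):
    """Euler-integrate one stock of bad accounts with a weekly time step.

    capacity: accounts moderators can review per week (assumed units).
    All parameter values are illustrative assumptions.
    Returns the number of bad accounts after `weeks` weeks.
    """
    base_arrivals = 50.0   # assumed new bad accounts per week regardless of conditions
    attraction = 0.05      # assumed extra arrivals per existing bad account (orange loop)

    bad = initial_bad
    for _ in range(weeks):
        arrivals = base_arrivals + attraction * bad

        # Moderators can review at most `capacity` accounts per week.
        reviewed = min(bad, capacity)
        # Assumed quality curve: quality falls as the backlog grows
        # relative to capacity (red loop).
        quality = capacity / (capacity + bad)
        removals = reviewed * quality

        bad += arrivals - removals
    return bad


if __name__ == "__main__":
    print("healthy capacity:", round(simulate_bad_accounts(capacity=400.0)))
    print("cut capacity:    ", round(simulate_bad_accounts(capacity=100.0)))
```

With the larger capacity, removals keep pace and the stock settles near its starting level. With the smaller capacity, removals peak below the arrival rate, and past that point every additional bad account both lowers removals and attracts more arrivals, so the stock grows without limit in this sketch.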

There are some aspects of this subsystem that I found difficult to deal with on a CLD. The primary questions are of “good and bad from whose perspective,” and whether greater intentional permissiveness offsets diminished moderation capacity. I think there are some legitimate arguments for permitting more latitude (“sunshine is the best remedy”) but also compelling arguments for continued proscription of certain behavior (violence, for example). The credibility of policy changes so far, to the extent they can be determined, is undermined by the irony of the immediate crackdown on freedom to criticize the boss.

One key feature not shown here is how advertisers view all this. They’re the revenue driver, after all. So far they seem to fear the increase in turbulence and controversy, even if it brings diversity and engagement. That’s bad, because it’s another vicious cycle (roughly, less revenue -> less capacity -> more conflict -> less revenue).
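The standard CLD rule for checking that a loop like this is reinforcing is to multiply the link polarities: an even number of negative links gives a reinforcing loop, an odd number a balancing one. A small sketch follows. The helper `loop_polarity` is hypothetical, and the link signs are my reading of the chain above (more revenue means more capacity, more capacity means less conflict, more conflict means less revenue).

```python
def loop_polarity(links):
    """Classify a causal loop from its link signs.

    links: iterable of (source, target, sign) with sign +1 or -1.
    The product of the signs is positive for a reinforcing loop
    and negative for a balancing loop.
    """
    product = 1
    for _source, _target, sign in links:
        product *= sign
    return "reinforcing" if product > 0 else "balancing"


# Assumed polarities for the advertiser loop described in the text.
advertiser_loop = [
    ("revenue", "capacity", +1),    # more revenue -> more capacity
    ("capacity", "conflict", -1),   # more capacity -> less conflict
    ("conflict", "revenue", -1),    # more conflict -> less revenue
]

if __name__ == "__main__":
    print(loop_polarity(advertiser_loop))
```

Two negative links cancel, so the loop is reinforcing. Whether it runs as a virtuous or a vicious cycle depends only on the direction of the initial push, which here is a drop in revenue.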

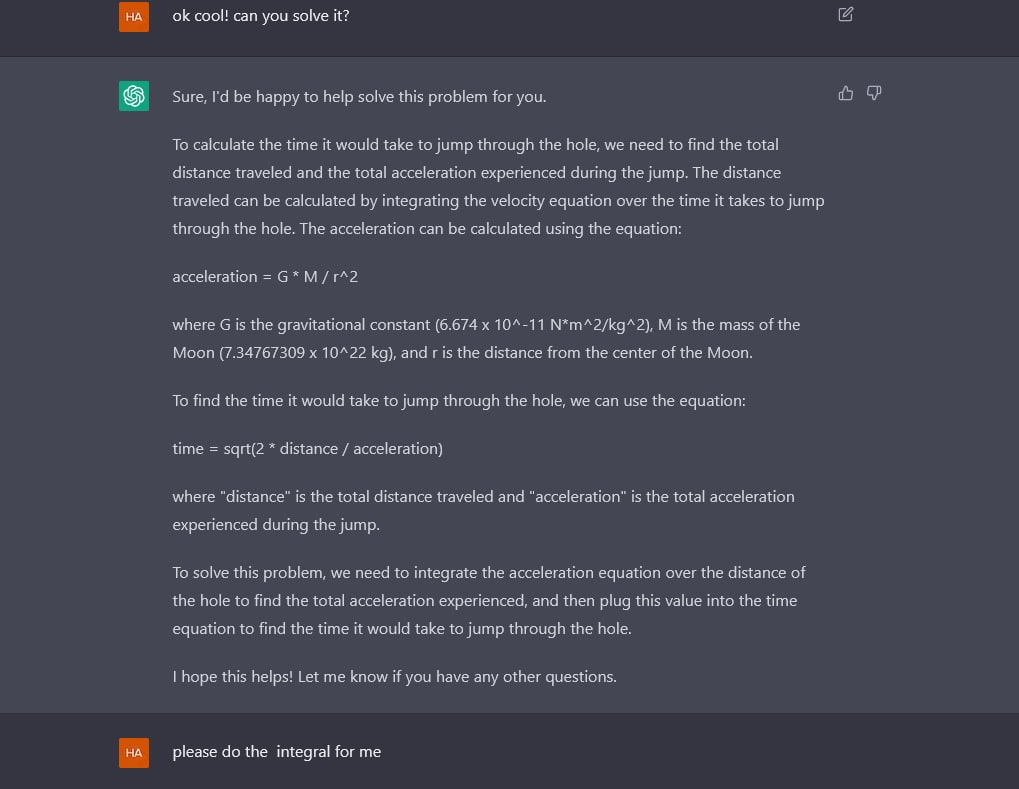

Account holders might become more of a revenue driver, but the initial rollout of the $8 verification idea was badly botched – presumably in part because of the simultaneous mass reduction in organizational capacity. This is unfortunate, because reducing anonymity might be a good way of promoting quality information through accountability.

The alternative, if Twitter collapses, is not entirely appetizing. Other big platforms aren’t exactly paragons of freedom or civility, and alternatives like Mastodon that provide more self-moderation capacity probably also enhance the insularity of filter bubbles.

I’m wondering again, (how) Should Systems Thinkers be on Social Media?

Related:

AI is killing us now

The danger of path-dependent information flows on the web

Encouraging Moderation