Last week Nature editorialized,

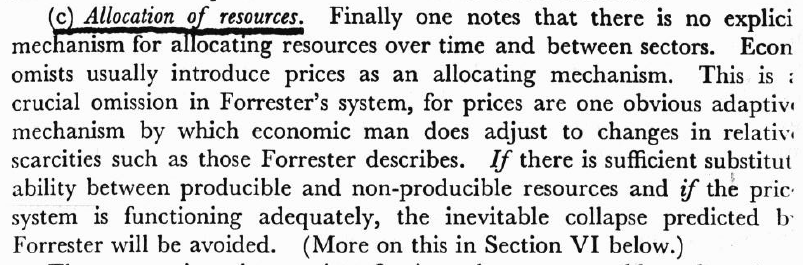

Are there limits to economic growth? It’s time to call time on a 50-year argument

Fifty years ago this month, the System Dynamics group at the Massachusetts Institute of Technology in Cambridge had a stark message for the world: continued economic and population growth would deplete Earth’s resources and lead to global economic collapse by 2070. This finding was from their 200-page book The Limits to Growth, one of the first modelling studies to forecast the environmental and social impacts of industrialization.

For its time, this was a shocking forecast, and it did not go down well. Nature called the study “another whiff of doomsday” (see Nature 236, 47–49; 1972). It was near-heresy, even in research circles, to suggest that some of the foundations of industrial civilization — mining coal, making steel, drilling for oil and spraying crops with fertilizers — might cause lasting damage. Research leaders accepted that industry pollutes air and water, but considered such damage reversible. Those trained in a pre-computing age were also sceptical of modelling, and advocated that technology would come to the planet’s rescue. Zoologist Solly Zuckerman, a former chief scientific adviser to the UK government, said: “Whatever computers may say about the future, there is nothing in the past which gives any credence whatever to the view that human ingenuity cannot in time circumvent material human difficulties.”

“Another Whiff of Doomsday” (unpaywalled: Nature whiff of doomsday 236047a0.pdf) was likely penned by Nature editor John Maddox, who wrote in his 1972 book, The Doomsday Syndrome,

“Tiny though the earth may appear from the moon, it is in reality an enormous object. The atmosphere of the earth alone weighs more than 5,000 million million tons, more than a million tons of air for each human being now alive. The water on the surface of the earth weighs more than 300 times as much – in other words, each living person’s share of the water would just about fill a cube half a mile in each direction… It is not entirely out of the question that human intervention could at some stage bring changes, but for the time being the vast scale on which the earth is built should be a great comfort. In other words, the analogy of space-ship earth is probably not yet applicable to the real world. Human activity, spectacular though it may be, is still dwarfed by the human environment.”

Reciting the scale of earth’s resources hasn’t held up well as a counterargument to Limits, for the reason given by Forrester and Meadows et al. at the time: exponential growth approaches any finite limit in a relatively small number of doublings. The Nature editors were clearly aware of this back in ’72, but ignored its implications.
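To put rough numbers on the doubling argument, here’s a minimal sketch. The starting fractions and the 3%/year growth rate are illustrative assumptions, not figures from Limits or from Nature, and the function names are hypothetical.

```python
import math


def doublings_to_limit(current_use: float, limit: float) -> float:
    """Doublings needed for an exponentially growing quantity to reach a fixed limit."""
    if current_use <= 0 or limit <= current_use:
        raise ValueError("need 0 < current_use < limit")
    return math.log2(limit / current_use)


def years_to_limit(current_use: float, limit: float, growth_rate: float) -> float:
    """Years to reach the limit under continuous growth at growth_rate per year."""
    if growth_rate <= 0:
        raise ValueError("growth_rate must be positive")
    doubling_time = math.log(2) / growth_rate  # years per doubling
    return doublings_to_limit(current_use, limit) * doubling_time


# Hypothetical headroom: current use is one millionth, then one billionth, of the limit.
for headroom in (1e6, 1e9):
    n = doublings_to_limit(1.0, headroom)
    t = years_to_limit(1.0, headroom, 0.03)
    print(f"headroom {headroom:.0e}: {n:.1f} doublings, {t:.0f} years at 3%/yr")
```

The point is how weakly the answer depends on the size of the limit: making the resource a thousand times larger adds only about ten doublings (log2 of 1000 is just under 10), or roughly 230 years at the assumed 3%/year.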

Instead, they subscribed to a “smooth approach” view, in which “a kind of restraint” limits population all by itself.

There are a lot of problems with this reasoning, not least of which is that economic activity is growing faster than population, yet there is no historic analog of the demographic transition for economies. However, I think the most fundamental problem with the editors’ mental model is that it’s effectively first order. Population is the only stock of interest; to the extent that they mention resources and pollution, it is only to propose that prices and preferences will take care of them. There’s no consideration of the possibility of a laissez-faire demographic transition resulting in absolute levels of population and economic activity requiring resource withdrawals that deplete resources and saturate sinks, leading to eventual overshoot and collapse. I’m reminded of Jay Forrester’s frequent comment, to the effect of, “if you have a model, you’ll be the only person in the room who can speak for 20 minutes without self-contradiction.” The ’72 Nature editorial clearly suffers for lack of a model.
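A toy comparison may help show what “first order” means here. This is a minimal sketch, not World3 and not anything the Nature editors wrote down: the function names, equations and parameter values are all hypothetical, chosen only to contrast a one-stock model (population with a built-in ceiling) against a two-stock model (population drawing down a finite resource).

```python
def first_order(p0=1.0, g=0.03, k=10.0, years=400, dt=0.25):
    """One stock: logistic growth toward an assumed ceiling k (the 'smooth approach')."""
    p, path = p0, [p0]
    for _ in range(int(years / dt)):
        p += dt * g * p * (1.0 - p / k)  # restraint is built into the growth rule
        path.append(p)
    return path


def second_order(p0=1.0, r0=1000.0, b=0.04, d=0.01, c=1.0, years=400, dt=0.25):
    """Two stocks: population p plus a nonrenewable resource r that p draws down."""
    p, r, path = p0, r0, [p0]
    for _ in range(int(years / dt)):
        use = min(c * p * dt, r)      # cannot withdraw more than remains
        growth = b * (r / r0) - d     # net growth rate falls as the resource depletes
        r -= use
        p += dt * growth * p
        path.append(p)
    return path


smooth = first_order()
boom = second_order()
print(f"first order:  final={smooth[-1]:.2f}, max={max(smooth):.2f}")
print(f"second order: final={boom[-1]:.2f}, max={max(boom):.2f}")
```

In the one-stock version the population settles smoothly at its ceiling. In the two-stock version it peaks and then declines as the resource stock runs down, even though early growth looks just as benign. Only the second structure can represent overshoot at all.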

While the ’22 editorial at last acknowledges the existence of the problem, its prescription is “more research.”

Researchers must try to resolve a dispute on the best way to use and care for Earth’s resources.

…

But the debates haven’t stopped. Although there’s now a consensus that human activities have irreversible environmental effects, researchers disagree on the solutions — especially if that involves curbing economic growth. That disagreement is impeding action. It’s time for researchers to end their debate. The world needs them to focus on the greater goals of stopping catastrophic environmental destruction and improving well-being.

…

… green-growth and post-growth scientists need to see the bigger picture. Right now, both are articulating different visions to policymakers, and there is a risk this will delay action. In 1972, there was still time to debate, and less urgency to act. Now, the world is running out of time.

If there’s disagreement about the solution, then the solution should be distributed, so that we can learn from different approaches. It’s easy to verify success by checking the equilibrium conditions for sources and sinks: as long as resource stocks and sink capacities are still declining, policies need to adjust. However, I don’t think lack of agreement about the solution is the real problem.

The real problem is that the research “consensus that human activities have irreversible environmental effects” has no counterpart in the political and economic spheres. Neither green-growth nor degrowth has de facto support. This is not a problem that will be solved by more environmental or economic research.

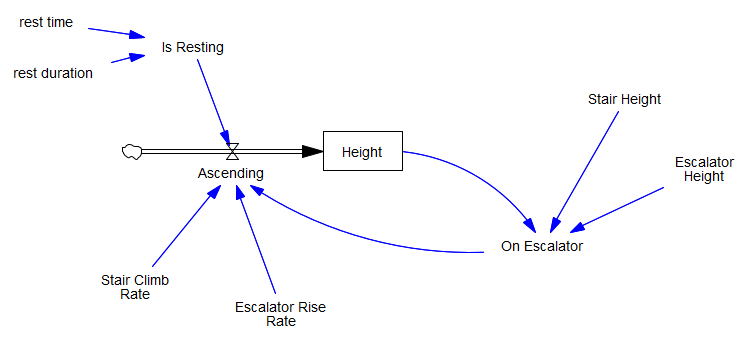

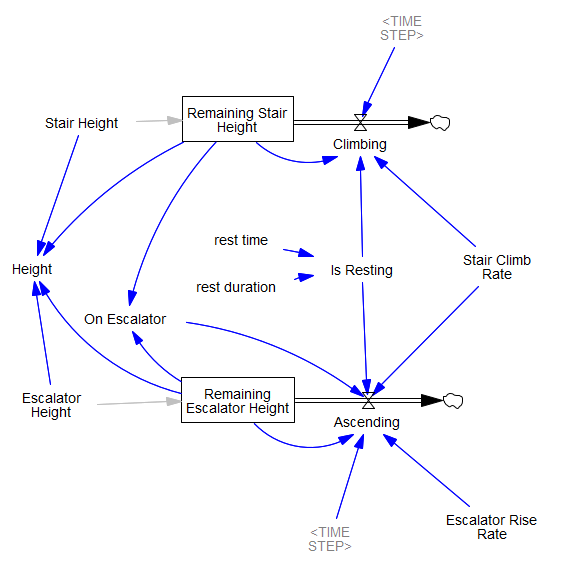

The logical loop is still there, and the rest of the accounting is more complex, so I think it’s inevitable.
